Orochi Network: Verifiable Data Infrastructure

Challenges

Data Integrity

In a truly decentralized Web3 ecosystem, data integrity would be ensured through a distributed network of aggregators. Each node could independently prove the correctness of data using cryptographic protocols.

However, many Web3 solutions today still rely on oracles, which are fundamentally flawed because they cannot guarantee data authenticity. Consequently, smart contracts are often unable to confirm the legitimacy of data provided by third parties. This vulnerability can lead to losses and fraudulent activities.

Data Availability

Smart contracts run in an isolated environment on the blockchain, such as the EVM or a WASM runtime. While this isolation allows smart contracts to execute consistently regardless of differences in the underlying architecture, it also limits their ability to interact directly with external data sources, such as those in the real world.

Additionally, as the number of users and transactions on a blockchain network grows, storing and accessing all data on-chain becomes increasingly impractical and costly.

Interoperability

Interoperability is a critical aspect of any decentralized Web3 ecosystem. However, many existing solutions struggle with interoperability due to the lack of standardization and compatibility between different architectures.

Existing DA Layer solutions are essentially a combination of a blockchain and commitment schemes, and they fail to prove the DA state to on-chain contracts in a single succinct proof.

Scalability

Today's DA Layers build on existing technical stacks, which means they also inherit the issues of existing blockchains, namely finality and scalability. They cannot deallocate resources stored on their systems, and they are unable to reach instant finality with BFT consensus.

Our Solutions

Orochi Network: Verifiable Data Infrastructure

Orochi Network positions itself as the first Verifiable Data Infrastructure, emphasizing the use of ZKPs for secure and verifiable data processing. This focus on ZKPs caters to applications and platforms that require high levels of privacy, security, and decentralization. Here's a breakdown of the key features and potential of our ZKP-centric approach:

- Native ZK-data-rollups: Unlike other DA Layers, Orochi Network natively supports ZKPs and performs rollups on the data itself. This allows data to be verified efficiently on-chain with a single succinct proof, potentially improving scalability and privacy for decentralized applications.

- Verifiable Data Pipeline: Orochi Network goes beyond data availability. We offer cryptographic proofs at every step of data processing, from sampling to storage and retrieval. Our solution relies only on cryptographic protocols, which removes the need to trust third parties and helps transform Real World Data into Provable Data that can be read and verified by smart contracts.

- Utilizes Merkle Directed Acyclic Graph (Merkle DAG): Orochi Network leverages Merkle DAG technology, potentially offering advantages over traditional blockchain structures in terms of scalability and performance.

- Succinct Hybrid aBFT Consensus: This consensus mechanism allows for asynchronous finalization of states, potentially improving efficiency compared to synchronous approaches used by some competitors.

- Proof-System Agnostic: Orochi Network can work with various ZKP systems like Plonky3, Halo2, Nova, and Pickles, offering developers flexibility in choosing the most suitable proof system for their needs.

- Blockchain Agnostic: Orochi Network is designed to be blockchain-agnostic by leveraging ZKPs to improve interoperability between different blockchains, potentially enabling integration with diverse blockchain platforms.

Overview

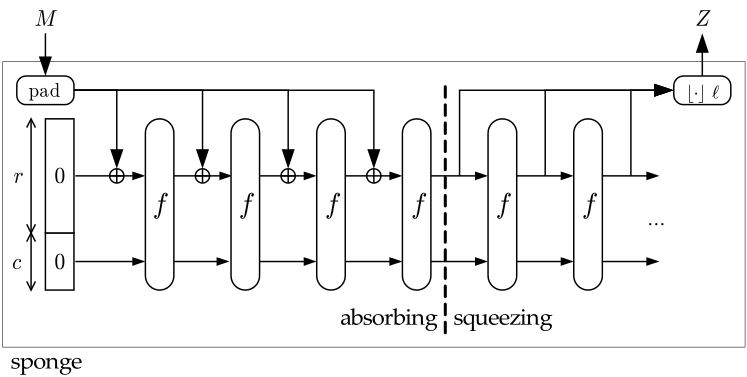

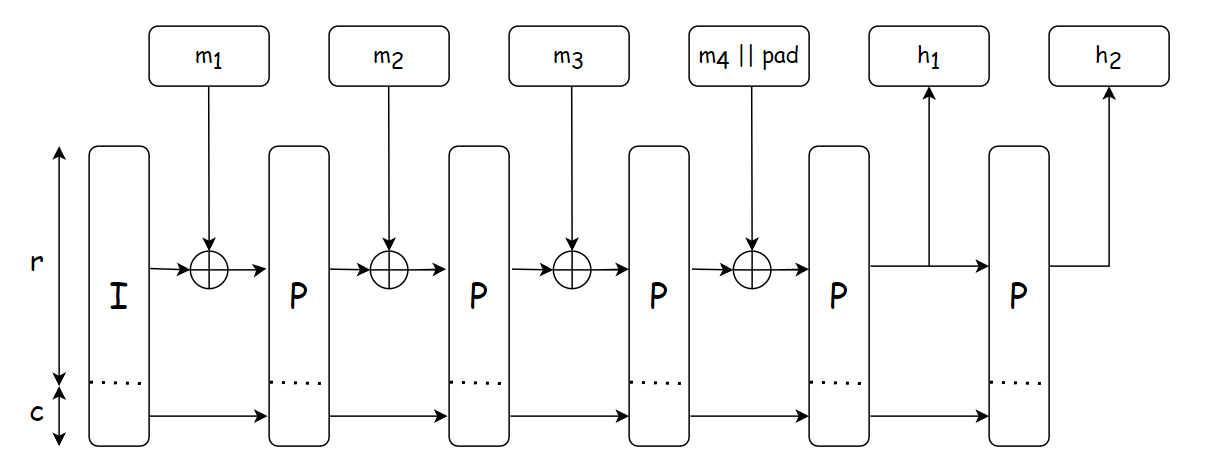

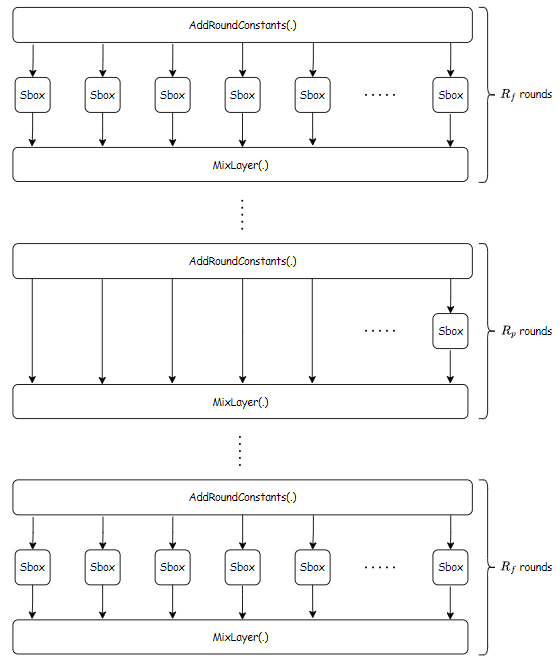

zk-SNARKs serve as succinct proofs for on-chain and off-chain interoperability by establishing cryptographic verification between the two. We also use this property to perform layer-to-layer interactions. Our Verifiable Data Infrastructure supports ZKPs natively. For each blockchain, we use the commitment schemes and ZKPs that are most compatible with that platform. This approach allows a large leap in performance and compatibility.

```
                               ┌──────────────────────┐
                               │   Execution Layer    │
                               └──────────▲───────────┘
                                          │
                                          │ Data & ZKP
                     Verifiable           │
┌───────────────────┐ Sampling ┌──────────┴───────────┐
│  Real World Data  ┼──────────►         VDI*         │
└───────────────────┘          └──────────┬───────────┘
                                          │
                                          │ Commit
                                          │
                               ┌──────────▼───────────┐
                               │   Settlement Layer   │
                               └──────────────────────┘
```

(*): Verifiable Data Infrastructure

Verifiable Data Pipeline

We not only support ZKPs natively but also generate cryptographic proofs of data integrity. This addresses one of the most challenging problems: Real World Data is not provable to smart contracts and dApps. The Verifiable Data Pipeline opens the door to the future of Provable Data.

```
┌────────────┐                   ┌────────────┐
│            │                   │            │
│ Verifiable │     Sampling      │ Verifiable │
│  Sampling  ├──────────────────►│ Processing │
│            │                   │            │
└────────────┘                   └──────┬─────┘
                                        │
                                        │
                                        │ Store
                                        │
                                        │
                                 ┌──────▼─────┐
                                 │            │
                     ┌───────────┤ Immutable  ◄────────────┐
                     │           │  Storage   │            │
                     │           │            │            │
                     │           └────────────┘            │
                     │ Lookup                              │ Update data with ZKP
                     │                                     │
                     │                                     │
                     │                                     │
              ┌──────▼─────┐                        ┌──────┴─────┐
              │            │                        │            │
              │   Lookup   │                        │ Transform  │
              │   Prover   │                        │   Prover   │
              │            │                        │            │
              └──────┬─────┘                        └──────▲─────┘
                     │                                     │
                     │                                     │
   Read data and ZKP │                                     │ Update data & schema
                     │  ┌──────────────────────────────┐   │
                     │  │                              │   │
                     └──►        Execution Layer       ├───┘
                        │                              │
                        └──────────────────────────────┘
```

ZK-data-rollups

We have all witnessed ZK-rollups in action. Many projects use ZKPs to build ZK-rollups that bundle thousands of transactions or executions into a single succinct proof, which can be verified efficiently on-chain. We apply the same property of ZK-rollups to data.

```
┌───┬───────────────────┐  x
│ W │ Data transaction  │  xxx
└───┴───────────────────┘  xxxxx
┌───┬───────────────────┐    xxxxx      ┌───┬───┐
│ U │ Data transaction  │      xxx      │ W │ U │
└───┴───────────────────┘               ├───┼───┤
┌───┬───────────────────┐      xxx      │ R │ D │
│ R │ Data transaction  │    xxxxx      └───┴───┘
└───┴───────────────────┘  xxxxx        zk-SNARK
┌───┬───────────────────┐  xxx            Proof
│ D │ Data transaction  │  x
└───┴───────────────────┘
```

The Future of Web3

Orochi Network's Verifiable Data Infrastructure is a promising step towards a more secure, scalable, and user-friendly Web3. By leveraging the power of Zero-Knowledge Proofs, Verifiable Data Infrastructure offers solutions to some of the most pressing challenges facing the decentralized future of the internet. As Verifiable Data Infrastructure continues to evolve, it has the potential to be a game-changer for Web3, ushering in a new era of innovation and user adoption.

In essence, our suite of products, anchored by the innovative Verifiable Data Infrastructure, lays the groundwork for a future web built on secure, scalable, and user-friendly decentralized applications. By addressing the limitations of current dApps, Orochi Network could unlock the true potential of Web3, paving the way for a more decentralized and empowering online experience for everyone. The promise of Orochi Network has been recognized by leading organizations within the blockchain space. Orochi Network is a grantee of the Ethereum Foundation, Web3 Foundation, Mina Protocol, and Aleo. This recognition underscores the potential of our technology to shape the future of Web3.

Orochi ❤️ Open Source

All projects are open-sourced and public. We build everything for the common good.

- Our scientific paper that proposes Conditional Folding Scheme: RAMenPaSTA: Parallelizable Scalable Transparent Arguments of Knowledge for RAM Programs

- Our construction in Distributed ECVRF - Orand - A fast, publicly verifiable, scalable decentralized random number generator for blockchain-based applications

- Our zkDatabase - zkDatabase - Self-proving Database

- Our zkVM framework PoC - zkMemory - A universal memory prover in Zero-Knowledge Proof

- Our proposal to improve security of Smart Contracts - ERC-6366: Permission Token

- Our proposal to improve permission and role handling - ERC-6617: Bit Based Permission

Other Products of Orochi Network

built with ❤️ and 🦀

zkDatabase: Self-proving Database

zkDatabase inherits technology from Verifiable Data Infrastructure and zkMemory. This product line intends to fill the gap between enterprises and ZK technology, opening new possibilities for FinTech, Insurance, Gaming, and more. For the first time, off-chain data can be verified independently by any party. Let's look at the use cases of zkDatabase:

FinTech

zkDatabase can be utilized to enhance the security and privacy of financial transactions by proving data integrity and preventing money laundering. This can be achieved through Zero-Knowledge Proofs (ZKPs), with transactions recorded alongside proofs of their legitimacy that reveal no sensitive user data. For instance, zkDatabase can manage customer transaction records, ensuring that each transaction's compliance with Anti-Money Laundering (AML) regulations is verifiable without exposing the personal or financial details of users. This setup allows financial institutions to demonstrate to regulators that they adhere to AML standards while preserving customer privacy, thus maintaining trust and confidentiality in financial dealings.

IoT

Weak IoT devices often lack the processing power to implement robust security measures, but with zkDatabase, data can be stored in a centralized hub/server while proofs of data integrity are committed on-chain. This means sensitive information like sensor readings or device status can be verified for authenticity and integrity without exposing the actual data, thereby protecting these devices from unauthorized access or tampering while ensuring that privacy is maintained, even on low-power or resource-constrained IoT devices.

Healthcare

Zero-Knowledge Proofs can revolutionize data privacy by allowing medical records to be stored and managed in a way that ensures patient confidentiality. Using Zero-Knowledge Proofs, zkDatabase can enable healthcare providers to verify the legitimacy and integrity of medical data without exposing sensitive patient information. For instance, a patient's medical history or test results can be proven to be up-to-date and accurate for treatment or research purposes, but only the necessary details are shared, not the entire medical record. This enhances patient privacy, reduces the risk of data breaches, and ensures compliance with privacy regulations like HIPAA, all while facilitating secure data sharing among healthcare professionals.

AI/ML

zkDatabase will concentrate on delivering proofs of data integrity to complement the outcomes of Zero-Knowledge Machine Learning (zkML), thereby making the entire process verifiable. This synergy ensures that the data input into ML models remains confidential and unaltered, while the results can be proven accurate through Zero-Knowledge Proofs, enhancing trust and transparency in AI applications.

Insurance

zkDatabase uses Zero-Knowledge Proofs to prove that claims and judgements are based on accurate data without revealing personal details, enhancing trust and streamlining claims processing.

Web3 gaming

Players can prove their in-game achievements or asset ownership without revealing their strategy or personal data. Additionally, zkDatabase allows game logic to be verified by smart contracts, ensuring that game rules, outcomes, and fairness are transparently and securely checked on-chain. This setup reduces the chances of cheating, provides trust in game mechanics, and supports a seamless, privacy-respecting environment where players can engage in play-to-earn models with confidence in the system's integrity.

Identity

Users can prove aspects of their identity like age, nationality, or financial status to service providers without disclosing unnecessary personal details.

This document provides an in-depth exploration of the zkDatabase, covering its various components, functionalities, and the underlying mechanisms that drive its operations. Our aim is to offer a comprehensive understanding of how zkDatabase functions and operates within the Mina Blockchain ecosystem.

Specification

- Accumulation: Delving into the accumulation process, this section explains how zkApps on the Mina Blockchain accumulate many user interactions and process them together, so concurrent transactions do not invalidate each other.

- B-Tree: This part demystifies the role of B-Trees in organizing extensive data on the Mina Blockchain. Learn about their contribution to effective data management and utilization, and how they maintain order and efficiency.

- Composability: Explore the concept of composability within the Mina Blockchain, where different elements interlink seamlessly like parts of a well-coordinated mechanism, ensuring smooth operations.

- Serialization: In the context of SnarkyJS, serialization involves converting specific data types to BSON (Binary JSON) and vice versa. This process is crucial for efficient data storage and transmission within the SnarkyJS framework.

- Data Collection: Focuses on the process of extracting and processing information from blockchain networks. It involves retrieving transaction details and interactions for analysis, auditing, and ensuring transparency.

- Distributed Storage Engine: Shift your focus to the distributed storage engine, understanding the use of IPFS for secure and efficient data storage, ensuring data integrity and accessibility.

- Merkle Tree: Finally, dive into the functionalities of Merkle Trees in maintaining the accuracy and integrity of transactions and data, ensuring they remain tamper-proof within the blockchain network.

Accumulation

The problem statement

Suppose we have a zkApp that updates its state with each user interaction. The updated state relies on both the previous state and the inputs from the interaction – a scenario likely common to many zkApps. The straightforward approach is to update the on-chain state during every interaction. However, to derive the new state, we must refer to the existing state. To validate that we're genuinely referencing the on-chain state, a precondition is set on the present state. Here's the catch: if multiple users unknowingly send their transactions within the same block, they'll establish the same precondition based on the current state, and concurrently attempt to update it. As a result, every transaction following the initial one becomes obsolete due to a stale precondition and gets declined.
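The failure mode above can be reproduced with a toy model. The sketch below is plain TypeScript, not o1js. `Tx`, `applyBlock`, and the numeric state are hypothetical stand-ins for a zkApp transaction, block application, and an on-chain state field. It assumes transactions in a block are applied sequentially and that each one carries a precondition on the state its sender last read.

```typescript
// Hypothetical model of a zkApp transaction with a precondition on on-chain state.
interface Tx {
  sender: string;
  expectedState: number; // precondition: the state the sender read before sending
  increment: number;     // the new state is derived from the previous one
}

interface BlockResult {
  state: number;
  accepted: string[];
  rejected: string[];
}

// Transactions in a block are applied one after the other, in block order.
function applyBlock(initial: number, txs: Tx[]): BlockResult {
  let state = initial;
  const accepted: string[] = [];
  const rejected: string[] = [];
  for (const tx of txs) {
    if (tx.expectedState !== state) {
      // The precondition refers to a state that has already been replaced.
      rejected.push(tx.sender);
      continue;
    }
    state += tx.increment;
    accepted.push(tx.sender);
  }
  return { state, accepted, rejected };
}

// All three users read state 0 before the block and use it as their precondition.
const result = applyBlock(0, [
  { sender: "alice", expectedState: 0, increment: 5 },
  { sender: "bob", expectedState: 0, increment: 3 },
  { sender: "carol", expectedState: 0, increment: 1 },
]);
console.log(result.state, result.accepted, result.rejected);
```

Only Alice's transaction lands. Bob's and Carol's are rejected even though their inputs were valid, because they were built against a state that no longer exists by the time they execute.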

Accumulation and Concurrency in Mina

Accumulation scheme

An accumulation scheme is a scheme that allows the prover to combine several proofs into a single proof, which can be verified more efficiently compared to verifying each proof separately. This scheme is critical in scaling blockchain systems and other similar systems, which involve numerous transactions and hence, multiple proofs.

Mina's Proof Systems - offers deep insights into the utilization and implementation of accumulation schemes.

Block production

In the Mina blockchain, transactions are grouped into blocks and added to the blockchain in a sequential manner. The blocks are created by block producers (similar to miners in other blockchain networks), and the block producers are responsible for processing the transactions in the blocks they produce.

Now, when multiple transactions are sent to the same smart contract, and possibly calling the same function within a short time frame, they would be processed one after the other, in the order they are included in the block by the block producer.

Mina Block Production - a comprehensive guide to understanding the nuances of block production within the Mina network.

Handling Concurrent Calls to the Same Function

In the context of simultaneous calls to the same function in a smart contract:

- Atomicity: Each transaction is processed atomically. It will see a consistent state and produce a consistent state update.

- Isolation: Transactions are isolated from each other until they are included in the block, at which point the state changes become visible to subsequent transactions.

- Concurrency Issues: If two transactions are modifying the same piece of data, they will do so in the order determined by their position in the block, which prevents conflicts but can potentially lead to situations like front-running.

Accumulation in o1js

Actions, similar to events, are public pieces of data shared with a zkApp transaction. What sets actions apart is that past actions can be processed within a smart contract. This works because every account stores a commitment to the history of dispatched actions, known as the actionState, which lets the contract verify that the actions it processes are exactly those dispatched to it.
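The actionState can be pictured as a hash chain: each transaction's actions are hashed, and that hash is chained onto the previous state. The sketch below models this in plain TypeScript under stated assumptions. `toyHash` is a non-cryptographic FNV-1a stand-in for the real in-circuit hash, actions are plain strings, and `EMPTY_ACTION_STATE`, `dispatchAll`, and `actionsBetween` are hypothetical names. `actionsBetween` mimics what a range query between two action states has to do: find the starting pointer, then replay the chain until it reaches the end pointer.

```typescript
// Toy stand-in for the real in-circuit hash: 32-bit FNV-1a. NOT cryptographic.
function toyHash(...parts: (string | number)[]): number {
  const s = parts.join("|");
  let h = 0x811c9dc5;
  for (let i = 0; i < s.length; i++) {
    h ^= s.charCodeAt(i);
    h = Math.imul(h, 0x01000193) >>> 0;
  }
  return h;
}

// Hypothetical starting value of an account's action state.
const EMPTY_ACTION_STATE = toyHash("empty");

// One transaction's actions are hashed, then chained onto the previous state.
function nextActionState(prev: number, actions: string[]): number {
  return toyHash(prev, toyHash(...actions));
}

interface LogEntry {
  actions: string[];  // actions dispatched by one transaction
  stateAfter: number; // the account's action state after that transaction
}

function dispatchAll(batches: string[][]): LogEntry[] {
  const log: LogEntry[] = [];
  let state = EMPTY_ACTION_STATE;
  for (const actions of batches) {
    state = nextActionState(state, actions);
    log.push({ actions, stateAfter: state });
  }
  return log;
}

// Returns the actions dispatched after `from` up to and including `end`,
// replaying the chain to check that they really lead from `from` to `end`.
function actionsBetween(log: LogEntry[], from: number, end: number): string[][] {
  let i = 0;
  if (from !== EMPTY_ACTION_STATE) {
    i = log.findIndex((e) => e.stateAfter === from) + 1;
    if (i === 0) throw new Error("unknown starting action state");
  }
  const out: string[][] = [];
  let state = from;
  while (state !== end) {
    if (i >= log.length) throw new Error("end action state not reached");
    state = nextActionState(state, log[i].actions);
    out.push(log[i].actions);
    i++;
  }
  return out;
}

const log = dispatchAll([["deposit:alice:5"], ["deposit:bob:3"], ["deposit:carol:1"]]);
// Suppose Alice's deposit was already processed: only Bob's and Carol's remain.
const pending = actionsBetween(log, log[0].stateAfter, log[2].stateAfter);
console.log(pending);
```

Because each state commits to everything before it, a single field is enough to act as a pointer into the full action history.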

Practical Accumulation: Managing Accumulation in Code

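A code-based approach to handle accumulation using thresholds. Deposits only dispatch actions, and a separate method reduces them once the threshold is reached:

```typescript
import {
  AccountUpdate,
  Field,
  MerkleWitness,
  PublicKey,
  Reducer,
  SmartContract,
  State,
  Struct,
  UInt64,
  method,
  state,
} from 'o1js';

// Written against the pre-1.0 o1js API described in the API section below,
// where reducer.reduce() takes { state, actionState } and returns both.
const TRANSACTION_THRESHOLD = 8; // example value

class Witness extends MerkleWitness(8) {}
class MerkleUpdate extends Struct({ witness: Witness, newLeaf: Field }) {}
class RollupState extends Struct({ root: Field, count: Field }) {}

class Accumulator extends SmartContract {
  @state(Field) root = State<Field>();
  @state(Field) actionState = State<Field>();

  reducer = Reducer({ actionType: MerkleUpdate });

  // Deposits only dispatch an action. No on-chain state is read or written here,
  // so concurrent deposits in the same block do not invalidate each other.
  @method deposit(user: PublicKey, amount: UInt64, witness: Witness) {
    AccountUpdate.createSigned(user).send({ to: this.address, amount });
    this.reducer.dispatch(new MerkleUpdate({ witness, newLeaf: amount.value }));
  }

  // Once enough actions have accumulated, anyone can settle them in one transaction.
  @method rollup() {
    let root = this.root.get();
    this.root.assertEquals(root);
    let actionState = this.actionState.get();
    this.actionState.assertEquals(actionState);

    let pending = this.reducer.getActions({ fromActionState: actionState });
    let { state: result, actionState: newActionState } = this.reducer.reduce(
      pending,
      RollupState,
      // As in the original sketch, each witness is trusted to describe the tree
      // after the preceding actions; a production design would also check s.root.
      (s: RollupState, action: MerkleUpdate) =>
        new RollupState({
          root: action.witness.calculateRoot(action.newLeaf),
          count: s.count.add(1),
        }),
      { state: new RollupState({ root, count: Field(0) }), actionState }
    );

    // Circuits cannot branch on witnessed values, so the threshold is an assertion.
    result.count.assertGreaterThanOrEqual(Field(TRANSACTION_THRESHOLD));
    this.root.set(result.root);
    this.actionState.set(newActionState);
  }
}
```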

API

`reducer = Reducer({ actionType: MyAction })`

`reducer.dispatch(action: MyAction)`

- Description: Dispatches an Action. Similar to normal Events, Actions can be stored by archive nodes and later reduced within a SmartContract method to change the state of the contract accordingly.

- Parameters:

  - action (MyAction): Action to be stored.

`reducer.getActions({ fromActionState, endActionState })`

- Description: Fetches the list of previously emitted Actions. Intended to be used inside the circuit.

- Parameters:

  - fromActionState (Field, optional): The hash of already processed actions.

  - endActionState (Field, optional): Calculated state of non-processed actions. Can be either `account.actionState` or calculated with `Actions.updateSequenceState`.

- Returns: The dispatched actions within the designated range.

`reducer.fetchActions({ fromActionState, endActionState })`

- Description: Fetches the list of previously emitted Actions. Intended to be used outside the circuit.

- Parameters:

  - fromActionState (Field, optional): The hash of already processed actions.

  - endActionState (Field, optional): Calculated state of non-processed actions. Can be either `account.actionState` or calculated with `Actions.updateSequenceState`.

- Returns: The dispatched actions within the designated range.

`reducer.reduce(actions, stateType, reduce, initial, options?)`

- Description: Reduces a list of Actions, similar to `Array.reduce()`.

- Parameters:

  - actions (Action[][]): A list of sequences of pending actions. Each inner array (row) holds the actions dispatched by one transaction, and it has several entries when `dispatch` is called multiple times within a single `@method`. The maximum number of actions in a row is capped at 5.

  - stateType (`Provable<State>`): Specifies the type of the state.

  - reduce (`(state: State, action: Action) => State`): The function applied to each action in turn.

  - initial: An object comprising:

    - state (State): The current state.

    - actionState (Field): A pointer to actions in the historical record.

  - options (optional): An object comprising:

    - maxTransactionsWithActions (number, optional): Defines the upper limit on the number of transactions whose actions are processed at once. The default value is 32. This constraint exists because o1js cannot handle dynamic lists of variable sizes. The maximum value is limited by the circuit size.

    - skipActionStatePrecondition (boolean, optional): Skips the precondition assertion on the account. Each account has `account.actionState`, which is the hash of all dispatched actions. By default, after reducing, the resulting action state is asserted to equal `account.actionState`, i.e. every dispatched action must have been processed.

- Returns:

  - state (State): The updated state of the zkApp, e.g., the resulting root.

  - actionState (Field): The new position in the action history, i.e. the hash of the actions that have been processed.

Manual calculation of the pointer to the actions history

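```typescript
// pendingActions: the actions of a single account update, each serialized as Field[]
let actionsHash = AccountUpdate.Actions.hash(pendingActions);
// Chain that hash onto the current action state to get the new pointer.
let newState = AccountUpdate.Actions.updateSequenceState(currentActionState, actionsHash);
```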

Commutative

Actions are processed without a guaranteed order. To avoid race conditions in the zkApp, actions must be commutative with respect to any state: for any two actions a1 and a2 and any state s, applying a1 then a2 to s (written s * a1 * a2) must give the same result as applying a2 then a1 (s * a2 * a1).
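The property can be checked directly for a given reducer. The sketch below is plain TypeScript. `Deposit`, `Reduce`, `commutesFor`, and the two example reducers are hypothetical. It evaluates s * a1 * a2 and s * a2 * a1 and compares the results. Passing for sample inputs does not prove commutativity in general, but a single failure is enough to show a reducer is unsafe.

```typescript
// Hypothetical action: a deposit by some user.
interface Deposit {
  user: string;
  amount: number;
}

type Reduce<S> = (state: S, action: Deposit) => S;

// Checks s * a1 * a2 == s * a2 * a1 for one state and one pair of actions.
function commutesFor<S>(reduce: Reduce<S>, s: S, a1: Deposit, a2: Deposit): boolean {
  return reduce(reduce(s, a1), a2) === reduce(reduce(s, a2), a1);
}

// Commutative: summing amounts gives the same total in any order.
const addToTotal: Reduce<number> = (total, a) => total + a.amount;

// Not commutative: the result depends on which action is applied last.
const recordLastUser: Reduce<string> = (_last, a) => a.user;

const a1 = { user: "alice", amount: 5 };
const a2 = { user: "bob", amount: 3 };
console.log(commutesFor(addToTotal, 0, a1, a2));      // true
console.log(commutesFor(recordLastUser, "", a1, a2)); // false
```

A reducer like `recordLastUser` would make the final state depend on block ordering, which is exactly the race condition this rule is meant to prevent.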

Action Location

All actions are stored in archive nodes.

Resources

- https://github.com/o1-labs/o1js/issues/265

- https://github.com/o1-labs/snarkyjs/issues/659

- https://docs.minaprotocol.com/zkapps/o1js/actions-and-reducer

B-tree

A B-tree is a type of self-balancing search tree that maintains sorted data in a manner that allows for efficient insertion, deletion, and search operations. It is commonly used in database and file systems where large amounts of data need to be stored and retrieved efficiently.

Features

The main features of a B-tree are:

- All leaves are at the same level, making the tree perfectly balanced.

- Each node in the B-tree contains a certain number of keys and pointers. The keys act as separation values which divide its subtrees. When we insert a new key into a B-tree, and if the node we want to insert into is already full, we perform a split operation. Similarly, deletion might cause a node to be less than half full, violating the properties of the B-tree. In this case, we perform a merge operation.

- For a B-tree of order m (where m is a positive integer), every node has at most m children, and every internal node other than the root has at least ⌈m/2⌉ children (the root can have fewer).

- The keys within a node are ordered.

- The subtree between two keys k1 and k2 consists of all keys that are greater than or equal to k1 and less than k2.

Operations

- Insertion

- Deletion

- Search

- Split and merge

Split and Merge Operations

When we insert a new key into a B-tree, it's possible that the node we want to insert into is already full. In this case, we have to split the node. Here is a high-level overview of how the split operation works:

- The node to be split is full and contains m-1 keys, where m is the order of the B-tree.

- A new node is created, and approximately half of the keys from the original node are moved to this new node.

- A key from the original node is moved up into the node's parent to act as a separator between the original node and the new node. If the original node was the root and has no parent, a new root is created.

- The new node and the original node are now siblings.

The split operation maintains the property that all leaf nodes are at the same level since all splits start at the leaf level and work their way up the tree.
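A minimal sketch of the leaf-level split, assuming distinct numeric keys and a tree of order m (at most m - 1 keys per node). `insertIntoLeaf` and `SplitResult` are hypothetical names. Pushing the separator into the parent, and splitting the parent if it is also full, would repeat the same step one level up.

```typescript
type SplitResult =
  | { kind: "fits"; keys: number[] }
  | { kind: "split"; left: number[]; separator: number; right: number[] };

function insertIntoLeaf(keys: number[], key: number, m: number): SplitResult {
  // Insert the key at its sorted position (keys within a node stay ordered).
  const next = [...keys];
  let i = 0;
  while (i < next.length && next[i] < key) i++;
  next.splice(i, 0, key);

  // A node of order m holds at most m - 1 keys; if it still fits, we are done.
  if (next.length <= m - 1) return { kind: "fits", keys: next };

  // Overflow: the median moves up to the parent as the separator, and the
  // remaining keys are divided between the original node and a new sibling.
  const mid = Math.floor(next.length / 2);
  return {
    kind: "split",
    left: next.slice(0, mid),
    separator: next[mid],
    right: next.slice(mid + 1),
  };
}

// Order-4 B-tree: a node holds at most 3 keys.
const a = insertIntoLeaf([10, 20], 15, 4);     // fits: [10, 15, 20]
const b = insertIntoLeaf([10, 20, 30], 25, 4); // split: [10, 20] | 25 | [30]
console.log(a, b);
```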

Conversely, deletion from a B-tree might cause a node to be less than half full, violating the properties of the B-tree. In such cases, we perform a merge operation. Here's a simplified view of the merge process:

- Two sibling nodes, each with less than ⌈m/2⌉ keys, are combined into a single node.

- A key from the parent node, which separates these two nodes, is moved down into the merged node.

- If the parent node becomes less than half full as a result, it may also be merged with a sibling and so on.
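The merge step can be sketched in the same setting: order m, at most m - 1 keys per node, and at least ⌈m/2⌉ - 1 keys for non-root nodes. `mergeSiblings` and `MergeResult` are hypothetical names. The sketch only covers the merge itself. When the combined node would overflow, B-tree implementations typically borrow a key from the sibling instead, which is not shown here.

```typescript
// Non-root nodes of order m need at least ceil(m/2) - 1 keys.
const minKeys = (m: number): number => Math.ceil(m / 2) - 1;

interface MergeResult {
  merged: number[];          // the single node that replaces both siblings
  parentKeys: number[];      // the parent after its separator moved down
  parentUnderflows: boolean; // true if the merge must be repeated one level up
}

function mergeSiblings(
  parentKeys: number[],
  sepIndex: number, // index of the separator between `left` and `right`
  left: number[],
  right: number[],
  m: number,
  parentIsRoot: boolean,
): MergeResult {
  // The separator between the two siblings moves down into the merged node.
  const merged = [...left, parentKeys[sepIndex], ...right];
  if (merged.length > m - 1) {
    throw new Error("cannot merge: combined node would overflow");
  }
  const parent = parentKeys.filter((_, idx) => idx !== sepIndex);
  // The root is exempt from the minimum; an empty root is replaced by its only child.
  const parentUnderflows = !parentIsRoot && parent.length < minKeys(m);
  return { merged, parentKeys: parent, parentUnderflows };
}

// Order-4 tree with children [10] | [] | [50] under parent [20, 40]:
// deleting 30 emptied the middle child, so it merges with its left sibling.
const r = mergeSiblings([20, 40], 0, [10], [], 4, false);
console.log(r); // merged [10, 20], parent [40], no further underflow
```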

Time complexity

Each operation runs in logarithmic time - O(log n), making B-trees useful for systems that read and write large blocks of data, such as databases and filesystems.
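The logarithmic bound comes from the tree's height. For a B-tree of order m holding n ≥ 1 keys, with d = ⌈m/2⌉, the standard bounds are h_min = ⌈log_m(n + 1)⌉ - 1 (every node full) and h_max = ⌊log_d((n + 1) / 2)⌋ (every node at its minimum), where height counts edges from the root to a leaf. The hypothetical helper below evaluates both, assuming m ≥ 3.

```typescript
// Height = number of edges from the root to a leaf.
// Assumes n >= 1 keys and order m >= 3 (so every non-root internal node has >= 2 children).
function heightBounds(n: number, m: number): { min: number; max: number } {
  const d = Math.ceil(m / 2); // minimum children of a non-root internal node
  // Fullest possible tree: every node has m children.
  const min = Math.ceil(Math.log(n + 1) / Math.log(m)) - 1;
  // Sparsest possible tree: the root has 2 children, every other internal node has d.
  const max = Math.floor(Math.log((n + 1) / 2) / Math.log(d));
  return { min, max };
}

console.log(heightBounds(1000000, 4));   // min 9, max 18
console.log(heightBounds(1000000, 100)); // min 3, max 3
```

With a large order such as m = 100, a million keys fit in a tree only a few levels deep. This is why B-trees suit storage systems that read whole blocks per node.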

Indexes

Database indexing is a data structure technique to efficiently retrieve records from the database files based on some attributes on which the indexing has been done. Indexing in databases works similarly to an index in a book.

Indexes are used to quickly locate data without having to search every row in a database table every time a database table is accessed. Indexes can be created using one or more columns of a database table, providing the basis for both rapid random lookups and efficient access of ordered records.

The two main types of database indexes are:

- Clustered Index: A clustered index determines the physical order of data in a table. Because the physical order of data in a table and the logical (index) order are the same, there can only be one clustered index per table.

- Non-clustered Index: A non-clustered index doesn’t sort the physical data inside the table. Instead, it creates a separate structure that contains the column(s) included in the index. The non-clustered index contains the values of the indexed column(s) and the address of the record each value corresponds to.

Difference between Clustered and Non-Clustered Indexes

In a clustered index, the leaf nodes of the B-tree structure contain the actual data rows. This is why there can only be one clustered index per table because it actually determines the physical order of data in the table.

In a non-clustered index, the leaf nodes contain a pointer or reference to the data rows, not the data itself. The data can be stored anywhere else in the database, and this pointer helps to quickly locate the actual data when needed.

Additional considerations when choosing between a Clustered and Non-Clustered Index include the order of data, frequency of updates, width of the table, and the need for multiple indexes. For instance, if the data in a table is accessed sequentially, a clustered index can be beneficial. If a table requires access via multiple different key columns, non-clustered indexes could be a good solution as you can create multiple non-clustered indexes on a single table.

| S.No | Aspect | Clustered Indexes | Non-Clustered Indexes |
|---|---|---|---|
| 1 | Data sorting | Defines the physical order of the table's rows, much like the alphabetical order of a dictionary. | Stores the index in one place and the data rows in another. |
| 2 | Speed | Generally faster for retrieving data in sorted order or ranges of values. | Generally slower than the clustered index. |
| 3 | Memory usage | Requires less memory to execute operations. | Requires more memory to execute operations. |
| 4 | Storage | Leaf nodes of the index contain the data rows themselves. | Leaf nodes of the index contain references to the data rows, not the rows. |
| 5 | Number per table | A table can have only one clustered index. | A table can have multiple non-clustered indexes. |
| 6 | Data storage | The index itself is how the table's data is stored on disk. | Stored as a separate structure alongside the table's data. |

Resources: Difference between Clustered and Non-Clustered Index

Choosing Between Clustered and Non-Clustered Indexes

The choice between a clustered index and a non-clustered index often depends on the specific use case, the nature of the data, and the types of queries the database will serve.

- Order of Data: If the data in a table is accessed sequentially, then a clustered index is typically the better choice because it physically stores the row data in sorted order. This can significantly speed up range queries and ordered access.

- Frequent Updates: If the indexed columns are updated frequently, non-clustered indexes can be a better choice. This is because any change to the data value of a clustered index requires physically rearranging the rows in the database, which can be an expensive operation.

- Wide Tables: In wide tables, where each row has a lot of data, non-clustered indexes can be beneficial. This is because non-clustered indexes only store the indexed columns and a pointer to the rest of the data, reducing the amount of data that needs to be read from disk for each query.

- Multiple Indexes: If a table needs to be accessed by multiple different key columns, non-clustered indexes can be a good solution because you can create multiple non-clustered indexes on a single table. Each non-clustered index will be optimized for access by its specific key column(s).

Clustered Indexes:

- Primary Key: If a column is a unique identifier for rows in a table (like an ID), it should typically have a clustered index. The primary key of a table is a good candidate for a clustered index.

- Range Queries: Clustered indexes are beneficial for range queries that return a large range of ordered data, and queries where you expect to retrieve the data sorted by the indexed columns. The database can read the data off the disk in one continuous disk scan.

- Frequently Accessed Tables: If a table is frequently accessed by other database operations, like a foreign key relationship, a clustered index can help speed these operations.

Resources: [Clustered Index](https://vladmihalcea.com/clustered-index/)

Non-Clustered Indexes:

- Non-Unique Columns: If a column is not unique or has a high level of duplication, a non-clustered index can be a better choice.

- Specific Columns: If only specific columns are frequently accessed, a non-clustered index can provide quicker lookups since it doesn’t need to go through the entire row.

- Covering Indexes: For queries that can be covered by an index, a non-clustered index that includes all the necessary data can be highly efficient.

- Frequently Updated or Inserted Tables: If a table's data is frequently updated or if new data is often inserted, using non-clustered indexes can be beneficial as they can be less resource-intensive to maintain.

Multiple different keys

If you need to optimize access based on multiple different keys, it is more common to create multiple B-trees (i.e., multiple indexes), each with its own key. This way, you maintain the efficient logarithmic time complexity for searching, inserting, and deleting nodes in each tree.

Storing data rows

A concise overview of data persistence:

- When we insert records into a table with a clustered index (typically created on the primary key), the database management system stores the records directly within the leaf nodes of the B-tree structure for this index. The records are sorted in the B-tree based on the values of the primary key.

- We can create additional non-clustered indexes on the same table. These non-clustered indexes also use a B-tree structure, but they work slightly differently. Instead of storing the full record within the leaf nodes, they store the index key (which could be any column or combination of columns, not necessarily the primary key) and a reference (like a pointer) to the actual record in the clustered index.

- When we perform a lookup using a non-clustered index, the database management system first locates the index key in the B-tree of the non-clustered index, finds the reference to the actual record, then uses that reference to retrieve the record from the B-tree of the clustered index.
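The two-step lookup can be sketched in plain TypeScript. Ordinary `Map`s stand in for the two B-trees here, so ordering, pages, and range scans are not modeled, only the path a lookup follows. `Row`, `insertRow`, and `findByEmail` are hypothetical names.

```typescript
interface Row {
  id: number; // primary key
  email: string;
  name: string;
}

// Stand-ins for the two B-trees.
const clusteredIndex = new Map<number, Row>(); // primary key -> full row
const emailIndex = new Map<string, number>();  // index key -> primary key (a reference)

function insertRow(row: Row): void {
  clusteredIndex.set(row.id, row);   // the row itself lives in the clustered index
  emailIndex.set(row.email, row.id); // the non-clustered index stores only a reference
}

function findByEmail(email: string): Row | undefined {
  const id = emailIndex.get(email); // step 1: search the non-clustered index
  if (id === undefined) return undefined;
  return clusteredIndex.get(id);    // step 2: follow the reference into the clustered index
}

insertRow({ id: 1, email: "alice@example.com", name: "Alice" });
insertRow({ id: 2, email: "bob@example.com", name: "Bob" });
console.log(findByEmail("bob@example.com")?.name); // Bob
```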

Composability

Composability is the ability for different decentralized applications (dApps) or smart contracts to interact with each other in a seamless manner.

zkApp composability refers to the ability to call zkApp methods from other zkApp methods. It uses the callData field on the zkApp party (the structure now called an AccountUpdate) to connect the result of the called zkApp to the circuit/proof of the caller zkApp.

CallData

CallData is an opaque piece of data used for communication between zkApps. `callData` is a specific data structure generated during the execution of a zkApp method, and it is crucial in establishing a connection between the caller and the callee during a zkApp call.

Composition of CallData

The callData is computed within the callee's circuit as a hash of the following elements:

- Inputs: The arguments that are being used to call a particular method in the smart contract, represented as an array of field elements.

- Outputs: The return values generated by the method, also represented as an array of field elements.

- Method Index: A numerical identifier for the method that is being called within the smart contract.

- Blinding Value: A random value that is known to both the caller and callee circuits at the time of proof generation, used to maintain the privacy of the inputs and outputs.

Working

- The callee smart contract first computes the callData hash from the elements above and stores it in its own callData field.

- When the caller initiates a call to the callee zkApp, it witnesses the callee's party along with the hash of the callee's children and the method's return value.

- Within the caller's circuit, the same hash is then recomputed and compared against the callData taken from the callee, which ensures that the call was executed with exactly the given inputs and produced the claimed outputs.

- This callData acts as the link that lets the caller zkApp make authenticated calls to another zkApp (the callee) while preserving the privacy and integrity of the transaction.
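The compute-then-compare flow above can be illustrated outside a circuit. This is a hedged sketch only: SHA-256 over decimal strings stands in for Poseidon over Field elements, the ordering (inputs, outputs, method index, blinding value) is an assumption rather than o1js's actual layout, and all names and values are hypothetical.

```typescript
import { createHash } from "node:crypto";

// Stand-in for Poseidon: SHA-256 over the decimal encoding of the "field elements".
function hashFields(fields: bigint[]): string {
  return createHash("sha256").update(fields.join(",")).digest("hex");
}

// Assumed layout: inputs, outputs, method index, blinding value. Input and output
// lengths are fixed by the method signature, so plain concatenation is unambiguous.
function computeCallData(inputs: bigint[], outputs: bigint[], methodIndex: number, blinding: bigint): string {
  return hashFields([...inputs, ...outputs, BigInt(methodIndex), blinding]);
}

// Callee side: run the method (here it doubles its argument) and publish callData.
const blinding = 987654321n; // hypothetical value known to both circuits while proving
const inputs = [10n];
const outputs = [inputs[0] * 2n];
const calleeCallData = computeCallData(inputs, outputs, 0, blinding);

// Caller side: recompute the hash from the arguments it passed and the outputs it received.
function callerAccepts(args: bigint[], claimedOutputs: bigint[], methodIndex: number, calleeData: string): boolean {
  return computeCallData(args, claimedOutputs, methodIndex, blinding) === calleeData;
}

console.log(callerAccepts([10n], [20n], 0, calleeCallData)); // true
console.log(callerAccepts([10n], [21n], 0, calleeCallData)); // false: the claimed output is wrong
```

Because the blinding value enters the hash, an observer who sees only the callData cannot simply hash candidate inputs and outputs to learn them.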

Method Index

The methods are stored in a fixed order, and that order is baked into the verification key at compile time. The order depends on the order in which the @method decorators are called, but that is an implementation detail.

AccountUpdate

An AccountUpdate in the Mina Protocol signifies a set of alterations and events related to a single account during a transaction.

Each zkApp transaction constructed by o1js is composed of one or more AccountUpdates, arranged in a tree-like structure. The tree is executed in pre-order: each AccountUpdate is processed before its children, and the children are processed in order, so execution starts with the top-level account update and then descends into its subtrees from left to right.
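Pre-order execution can be sketched as a short recursive walk. The `UpdateNode` type is a hypothetical simplification that keeps only a label and the child updates of a single top-level AccountUpdate.

```typescript
// Hypothetical, simplified AccountUpdate tree node: only a label and its child updates.
interface UpdateNode { label: string; children: UpdateNode[] }

// Pre-order traversal: visit the node first, then each child subtree from left to right.
function executionOrder(node: UpdateNode, out: string[] = []): string[] {
  out.push(node.label);
  for (const child of node.children) executionOrder(child, out);
  return out;
}

const tx: UpdateNode = {
  label: "caller",
  children: [
    { label: "callee", children: [{ label: "callee-child", children: [] }] },
    { label: "second", children: [] },
  ],
};

console.log(executionOrder(tx).join(" -> ")); // caller -> callee -> callee-child -> second
```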

Each AccountUpdate can be seen as a core surrounded by a set of metadata:

- Core component:

  - Updates: This is the nucleus of an AccountUpdate, embodying the critical changes brought about by the transaction, including changes to the zkApp state, the permissions, and the verification key linked to the account.

- Metadata components:

  - PublicKey: The unique identifier of the account being updated, akin to its address.

  - TokenId: The custom token involved, defaulting to the MINA TokenId (1). Together with the PublicKey, it uniquely identifies an account on the Mina Protocol.

  - Preconditions: The conditions or assertions that must be satisfied for the AccountUpdate to be applied successfully. These are usually expressed through precondition methods in the o1js library.

  - BalanceChange: Any change in the account's balance resulting from the transaction.

  - Authorization: How the AccountUpdate is authorized, either by a proof (checked against the verification key on the account) or by a signature.

  - MayUseToken: Whether the zkApp is permitted to interact with or manipulate its associated token.

  - Layout: Allows making assertions about the structure of an AccountUpdate, guaranteeing its compliance and integrity.
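To make the core/metadata split concrete, here is a hypothetical and heavily simplified TypeScript shape. It is not the o1js `AccountUpdate` type, which uses Field, UInt64 and PublicKey values and has more fields; plain strings and bigints keep the sketch standalone. The two helpers show that an account is keyed by the (PublicKey, TokenId) pair and how a precondition gates application.

```typescript
// Hypothetical, simplified shape of an AccountUpdate (not the real o1js type).
interface AccountUpdateSketch {
  // Core: the changes this update applies
  update: { appState?: (bigint | undefined)[]; verificationKey?: string; permissions?: string };
  // Metadata
  publicKey: string;
  tokenId: bigint; // 1n = the default MINA token id
  preconditions: { balance?: bigint }; // e.g. "balance must equal X before applying"
  balanceChange: bigint; // signed change in balance
  authorization: { kind: "proof"; proof: string } | { kind: "signature"; signature: string };
  mayUseToken: boolean;
}

// An account is identified by the pair (publicKey, tokenId), not by the public key alone.
function accountId(u: Pick<AccountUpdateSketch, "publicKey" | "tokenId">): string {
  return `${u.publicKey}:${u.tokenId}`;
}

// An update may only be applied if its preconditions hold for the current account state.
function preconditionsHold(u: AccountUpdateSketch, currentBalance: bigint): boolean {
  return u.preconditions.balance === undefined || u.preconditions.balance === currentBalance;
}
```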

Return types

Only types built out of Field are valid return types. This includes snarkyjs primitive types and custom CircuitValues.

Example

In the example below, CallerContract invokes a method of CalleeContract. During this interaction, two separate AccountUpdates are created to record the changes and events of the transaction: one for the parent (CallerContract) and one for the child (CalleeContract).

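import { SmartContract, method, Bool, UInt64, PublicKey } from "o1js";

class CalleeContract extends SmartContract {
  @method calledMethod(arg: UInt64): Bool {
    // ... callee logic; the return type must be built out of Field
    return Bool(true); // placeholder result
  }
}

class CallerContract extends SmartContract {
  // The callee's address is passed in as a method argument
  @method callerMethod(calleeAddress: PublicKey, arg: UInt64): Bool {
    let calledContract = new CalleeContract(calleeAddress);
    // Creates the child AccountUpdate, linked to this one through callData
    let result = calledContract.calledMethod(arg);
    return result;
  }
}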

- Once the child AccountUpdate is created, it is verified in the parent's circuit, with assertions that the right method was called with the correct parameters and produced the expected outcome.

- This process also verifies that the right zkApp was called, by checking the publicKey and tokenId recorded in the child AccountUpdate.

- After both AccountUpdates are verified, they are assembled into a tree-like structure that represents a cohesive record of the transaction.

- This hierarchical structure is then submitted, finalizing the transaction and leaving a secure, verified record of the entire interaction between the two contracts.

Together, these AccountUpdates form a complete and verified record of the transaction, preserving the integrity of the process and making it transparent and accountable.

Composability: Second way

Another approach to composability is to chain method calls from different smart contracts within one transaction. This could give us more flexibility. However, the open question is how to ensure or enhance the security of such an approach.

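import { SmartContract, method, Field, Mina } from "o1js";

class OneZkApp extends SmartContract {
  @method callOne(): Field {
    // ...
    return Field(1); // placeholder result
  }
}

class SecondZkApp extends SmartContract {
  @method callSecond(field: Field) {
    // ...
  }
}

// `feePayer`, `oneZkApp` and `secondZkApp` are assumed to be an existing fee payer
// and deployed instances of the two contracts
const tx = await Mina.transaction(feePayer, () => {
  // The output of one contract is fed directly into the other
  const result = oneZkApp.callOne();
  secondZkApp.callSecond(result);
});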

Resources

- https://github.com/o1-labs/snarkyjs/issues/303

Serialization

Serialization is the process of converting an object or data structure into a format that can be easily stored, transmitted, and reconstructed later. It is often used to save the state of a program, send data over a network, or store complex data structures, such as objects, in a human-readable or compact binary format. The opposite process, called deserialization, converts the stored format back into an object or data structure.

Data Type

SnarkyJS supported types

- Built-in types

  - Field
  - Bool
  - UInt32
  - UInt64
  - PublicKey
  - PrivateKey
  - Signature
  - Group
  - Scalar
  - CircuitString
  - Character

- Custom types

  - Struct *

- Trees

  - MerkleTree
  - MerkleMap

BSON supported types

- Double

- String

- Object

- Array

- Binary data

- Undefined

- ObjectId

- Boolean

- Date

- Null

- Regular Expression

- DBPointer

- JavaScript

- Symbol

- 32-bit integer

- Timestamp

- 64-bit integer

- Decimal128

- Min key

- Max key

Serialization/Deserialization

The following snippet converts a zk-SNARK data type into a BSON-supported format: the value is first converted into a Uint8Array, which is then serialized with BSON.

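import { UInt64, Encoding } from "snarkyjs";
import { BSON } from "bson";

const value = UInt64.from(12342);
// UInt64 -> Field[] -> bytes
const bytes: Uint8Array = Encoding.Bijective.Fp.toBytes(value.toFields());
// The bytes are stored as a BSON Binary field
const bson = BSON.serialize({ bytes });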

The next snippet converts the BSON data back into a zk-SNARK data type: it first deserializes the BSON data into a JavaScript object, then converts the Binary data into a Uint8Array, and finally uses a built-in decoding method to reconstruct the original field elements from the byte array.

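const deserializedBson = BSON.deserialize(bson);
// `bytes` comes back as a BSON Binary; `.buffer` holds its raw bytes
const convertedResult = new Uint8Array(deserializedBson.bytes.buffer);
// bytes -> Field[] (the fields of the original UInt64)
const initialField = Encoding.Bijective.Fp.fromBytes(convertedResult);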

Serializing Arbitrary Data into Field Elements

When serializing arbitrary data into field elements, note that a field element can hold at most 254 arbitrary bits (not 255), because the largest possible field element lies between \(2^{254}\) and \(2^{255}\).

You can use the Encoding.bytesToFields method, which packs 31 bytes (248 bits) into each field element.
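The 31-bytes-per-element rule can be illustrated with plain bigints. This sketch shows the packing idea only and is not the o1js implementation of Encoding.bytesToFields: the little-endian byte order and the separately stored length are assumptions made here.

```typescript
// 31 bytes = 248 bits, which stays below the 254-bit limit, so each chunk fits in one
// field element. Little-endian byte order is an assumption of this sketch.
const BYTES_PER_FIELD = 31;

function packBytes(bytes: Uint8Array): bigint[] {
  const fields: bigint[] = [];
  for (let start = 0; start < bytes.length; start += BYTES_PER_FIELD) {
    const chunk = bytes.subarray(start, start + BYTES_PER_FIELD);
    let x = 0n;
    // Build x = chunk[0] + chunk[1]*256 + chunk[2]*256^2 + ...
    for (let j = chunk.length - 1; j >= 0; j -= 1) x = (x << 8n) | BigInt(chunk[j]);
    fields.push(x);
  }
  return fields;
}

// The original length must be stored separately: trailing zero bytes are otherwise lost.
function unpackBytes(fields: bigint[], length: number): Uint8Array {
  const out = new Uint8Array(length);
  fields.forEach((field, k) => {
    let x = field;
    for (let j = 0; j < BYTES_PER_FIELD && k * BYTES_PER_FIELD + j < length; j += 1) {
      out[k * BYTES_PER_FIELD + j] = Number(x & 0xffn);
      x >>= 8n;
    }
  });
  return out;
}

const message = new TextEncoder().encode("a".repeat(70));
const packed = packBytes(message);
console.log(packed.length); // 3, since ceil(70 / 31) = 3
```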

HELP: We still need to clarify which data types will be supported.

Data Collection

Data is collected by requesting events from the Mina blockchain; these events are emitted by smart contracts.

Smart Contract

Define the names and types of your events:

events = {

"arbitrary-event-key": Field,

};

To send data to the blockchain, use the following method:

this.emitEvent("arbitrary-event-key", data);

Off-chain

The most convenient way to pull events off the blockchain is to make a GraphQL request:

Request

query getEvents($zkAppAddress: String!) {
  zkapps(
    query: {
      zkappCommand: { accountUpdates: { body: { publicKey: $zkAppAddress } } }
      canonical: true
      failureReason_exists: false
    }
    sortBy: BLOCKHEIGHT_DESC
    limit: 1000
  ) {
    hash
    dateTime
    blockHeight
    zkappCommand {
      accountUpdates {
        body {
          events
          publicKey
        }
      }
    }
  }
}

The response depends on the state of the smart contract, but it will be something like this:

Response

{
  "data": {
    "zkapps": [
      {
        "blockHeight": 17459,
        "dateTime": "2023-02-21T13:15:01Z",
        "hash": "CkpZ3ZXdPT9RqQZnmFNodB3HFPvVwz5VsTSkAcBANQjDZwp8iLtaU",
        "zkappCommand": {
          "accountUpdates": [
            {
              "body": {
                "events": ["1,0"],
                "publicKey": "B62qkzUATuPpDcqJ7W8pq381ihswvJ2HdFbE64GK2jP1xkqYUnmeuVA"
              }
            }
          ]
        }
      },
      {
        "blockHeight": 17458,
        "dateTime": "2023-02-21T13:09:01Z",
        "hash": "CkpaEP2EUvCdm7hT3cKe5S7CCusKWL2JgnJMg1KXqqmK5J8fVNYtp",
        "zkappCommand": {
          "accountUpdates": [
            {
              "body": {
                "events": [],
                "publicKey": "B62qkzUATuPpDcqJ7W8pq381ihswvJ2HdFbE64GK2jP1xkqYUnmeuVA"
              }
            }
          ]
        }
      },
      {
        "blockHeight": 17455,
        "dateTime": "2023-02-21T12:48:01Z",
        "hash": "CkpZePsTYryXnRNsBZyk12GMsdT8ZtDuzW5rdaBFKfJJ73mpJbeaT",
        "zkappCommand": {
          "accountUpdates": [
            {
              "body": {
                "events": ["13,12"],
                "publicKey": "B62qkzUATuPpDcqJ7W8pq381ihswvJ2HdFbE64GK2jP1xkqYUnmeuVA"
              }
            }
          ]
        }
      }
    ]
  }
}
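Once the response is fetched, it usually has to be flattened into one record per event. The sketch below assumes, as in the sample response, that each event is a string of comma-separated decimal field elements; how those fields map back to named events depends on how the contract declared them, so they are left uninterpreted. The types cover only the fields used here.

```typescript
// Minimal types for the parts of the response used here.
interface ZkappsResponse {
  data: {
    zkapps: {
      blockHeight: number;
      dateTime: string;
      hash: string;
      zkappCommand: { accountUpdates: { body: { events: string[]; publicKey: string } }[] };
    }[];
  };
}

interface DecodedEvent { blockHeight: number; txHash: string; fields: bigint[] }

// One entry per event emitted by `address`, oldest block first.
function extractEvents(res: ZkappsResponse, address: string): DecodedEvent[] {
  const out: DecodedEvent[] = [];
  for (const tx of res.data.zkapps) {
    for (const update of tx.zkappCommand.accountUpdates) {
      if (update.body.publicKey !== address) continue;
      for (const event of update.body.events) {
        // Assumed encoding: "13,12" -> [13n, 12n]
        out.push({ blockHeight: tx.blockHeight, txHash: tx.hash, fields: event.split(",").map((s) => BigInt(s.trim())) });
      }
    }
  }
  // The query sorts by BLOCKHEIGHT_DESC; replay events in chronological order instead
  return out.sort((a, b) => a.blockHeight - b.blockHeight);
}
```

Transactions with an empty `events` array (like block 17458 above) simply contribute nothing.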

Events

It is possible to send up to 16 fields in events in a single transaction, and each field can be up to 255 bits.

Distributed Storage Engine

This chapter provides a comprehensive overview of IPFS (InterPlanetary File System) and its components, explaining how data is replicated and retrieved in the network using unique identifiers such as PeerID and CID. It covers concepts like IPNS, which provides a permanent pointer to mutable data, and the Merkle DAG, a data structure essential for storing and retrieving data in IPFS.

Next, we describe the functionality and implementation of a Storage Engine, focusing on the IPFS Storage Engine.

IPFS

IPFS is a distributed protocol that lets you replicate data across a network: you can put data into IPFS and get it back as long as the data is still live (i.e. at least one node still holds it). Data is stored as blocks, and each block is identified by its digest.

PeerID

A PeerID is the unique identifier of a node in the network. It is derived from a hash of the node's public key, and the libp2p keypair is managed by its keychain. You can get the PeerID with:

import { createLibp2p } from "libp2p";

const libp2p = await createLibp2p({});
libp2p.peerId.toString();

CID

A CID is a unique fingerprint of data: you can access the data as long as you know its exact CID. A CID is computed with a hash function, but it is not simply the digest of the data; it is derived from the digests of the data's blocks.

Each digest is combined with codec information about the block using multiformats:

- Multihash for information on the algorithm used to hash the data.

- Multicodec for information on how to interpret the hashed data after it has been fetched.

- Multibase for information on how the hashed data is encoded. Multibase is only used in the string representation of the CID.

In our implementation we use CIDv1 with SHA256 and base58. We suspect Poseidon would be a better choice in the long term, so we would need to submit a Poseidon proposal to multihash.
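The three multiformats can be seen by assembling a CIDv1 by hand. This is an illustrative sketch, not the multiformats library: it builds version, multicodec (`raw`, 0x55) and multihash (sha2-256, code 0x12, length 32) as unsigned varints, then renders the bytes with multibase base32 (prefix `b`). It uses base32, the usual CIDv1 string form seen in the `bafkrei...` CIDs later in this document, rather than base58; changing the multibase only changes the string, not the binary CID.

```typescript
import { createHash } from "node:crypto";

// Unsigned LEB128 varint, as used throughout multiformats.
function varint(n: number): number[] {
  const out: number[] = [];
  do {
    let b = n & 0x7f;
    n >>>= 7;
    if (n !== 0) b |= 0x80;
    out.push(b);
  } while (n !== 0);
  return out;
}

// RFC 4648 base32, lowercase, no padding.
function base32(bytes: Uint8Array): string {
  const alphabet = "abcdefghijklmnopqrstuvwxyz234567";
  let bits = 0;
  let value = 0;
  let out = "";
  for (const byte of bytes) {
    value = (value << 8) | byte;
    bits += 8;
    while (bits >= 5) {
      out += alphabet[(value >>> (bits - 5)) & 31];
      bits -= 5;
    }
    value &= (1 << bits) - 1; // keep only the bits not yet emitted
  }
  if (bits > 0) out += alphabet[(value << (5 - bits)) & 31];
  return out;
}

const RAW_CODEC = 0x55; // multicodec: raw bytes
const SHA2_256 = 0x12; // multihash: sha2-256

function cidV1Raw(data: Uint8Array): string {
  const digest = createHash("sha256").update(data).digest();
  const bytes = Uint8Array.from([
    ...varint(1), // CID version
    ...varint(RAW_CODEC), // multicodec: how to interpret the block
    ...varint(SHA2_256), // multihash: hash function
    ...varint(digest.length), // multihash: digest length (32)
    ...digest,
  ]);
  return "b" + base32(bytes); // multibase prefix "b" = base32 lowercase
}

console.log(cidV1Raw(new Uint8Array())); // starts with "bafkreihdwdcef"
```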

IPNS

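As we saw above, a CID is derived from the content it identifies, so each DAG node is immutable. In practice, however, we want a pointer that stays the same while the data it points to changes. IPNS solves this by providing a permanent pointer (in fact, the hash of a public key) that can be updated to reference new CIDs.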
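The idea of a stable name that is re-pointed at new immutable CIDs can be sketched with Node's Ed25519 support. This is a conceptual model only: the real IPNS record format (encoding, validity, TTL) is richer, and the `MutablePointer` class, record layout and placeholder CIDs are hypothetical.

```typescript
import { createHash, generateKeyPairSync, sign, verify, KeyObject } from "node:crypto";

// Conceptual record: value (a CID), an increasing sequence number, and the owner's signature.
interface PointerRecord { value: string; sequence: number; signature: Buffer }

const toSign = (value: string, sequence: number): Buffer => Buffer.from(`${value}|${sequence}`);

class MutablePointer {
  readonly name: string; // stable name: hash of the public key (analogous to the PeerID)
  readonly publicKey: KeyObject;
  private readonly privateKey: KeyObject;
  private latest?: PointerRecord;

  constructor() {
    const { publicKey, privateKey } = generateKeyPairSync("ed25519");
    this.publicKey = publicKey;
    this.privateKey = privateKey;
    const der = publicKey.export({ type: "spki", format: "der" });
    this.name = createHash("sha256").update(der).digest("hex");
  }

  // Re-point the unchanged name at a new immutable CID, signed by the owner.
  publish(cid: string): PointerRecord {
    const sequence = (this.latest?.sequence ?? 0) + 1;
    this.latest = { value: cid, sequence, signature: sign(null, toSign(cid, sequence), this.privateKey) };
    return this.latest;
  }
}

// Anyone holding the public key can check a record before following it.
function resolveRecord(record: PointerRecord, publicKey: KeyObject): string {
  if (!verify(null, toSign(record.value, record.sequence), publicKey, record.signature)) {
    throw new Error("invalid signature");
  }
  return record.value;
}

const pointer = new MutablePointer();
const nameBefore = pointer.name;
pointer.publish("cid-of-version-1"); // placeholder CIDs
const r2 = pointer.publish("cid-of-version-2");
console.log(pointer.name === nameBefore, resolveRecord(r2, pointer.publicKey)); // true cid-of-version-2
```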

Merkle DAG

A Merkle DAG is a DAG where each node has an identifier, and this is the result of hashing the node's contents — any opaque payload carried by the node and the list of identifiers of its children — using a cryptographic hash function like SHA256. This brings some important considerations.

Our data will be stored in a sub-Merkle DAG. Every time we alter a leaf, the sub-Merkle DAG nodes above it change as well and their CIDs must be recomputed. This affects our implementation, since we need a metadata file to keep track of the CIDs and their children.

We can perform a lookup on a merkle DAG by using the CID of the root node. We can also perform a lookup on a sub-merkle DAG by using the CID of the root node of the sub-merkle DAG. DAG traversal is a recursive process that starts at the root node and ends when the desired node is found. This process is cheap and fast, since it only requires the node identifier.
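A toy Merkle DAG makes both points concrete: identifiers are derived from payload plus child identifiers, so editing a leaf changes every ancestor's identifier, and traversal only needs identifiers. SHA-256 over a JSON encoding stands in for real IPLD block encoding, and `DagStore` is a hypothetical in-memory store.

```typescript
import { createHash } from "node:crypto";

// Toy Merkle DAG node: an opaque payload plus the ids of its children.
interface DagNode { payload: string; links: string[] }

class DagStore {
  private nodes = new Map<string, DagNode>();

  // A node's id is the SHA-256 of its payload together with its children's ids.
  put(payload: string, links: string[] = []): string {
    const id = createHash("sha256").update(JSON.stringify({ payload, links })).digest("hex");
    this.nodes.set(id, { payload, links });
    return id;
  }

  // Recursive traversal from a root id; returns payloads in visit order.
  walk(id: string, out: string[] = []): string[] {
    const node = this.nodes.get(id);
    if (node === undefined) throw new Error(`missing node ${id}`);
    out.push(node.payload);
    for (const child of node.links) this.walk(child, out);
    return out;
  }
}

const store = new DagStore();
const docA = store.put("doc A");
const root1 = store.put("collection", [docA, store.put("doc B")]);

// Editing one leaf yields a new leaf id, so its parent (the root) gets a new id too.
const root2 = store.put("collection", [docA, store.put("doc B (edited)")]);
console.log(root1 !== root2); // true
console.log(store.walk(root2).join(", ")); // collection, doc A, doc B (edited)
```

The old root is still resolvable, which is why a mutable pointer (IPNS) and a metadata file are needed to track the current version.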

Javascript IPFS

js-ipfs paves the way for the Browser implementation of the IPFS protocol. Written entirely in JavaScript, it runs in a Browser, a Service Worker, a Web Extension and Node.js, opening the door to a world of possibilities.

We switched to Helia because js-ipfs has been discontinued.

libp2p

libp2p provides the building blocks for peer-to-peer applications and, together with its modules, handles everything related to the P2P network. It is flexible to use and develop with. To configure and work with libp2p you need to define:

- Transport:

  - TCP: The TCP transport module manages connections between nodes natively. TCP operates at the transport layer (layer 4), which makes maintaining connections more efficient, but it only works in the Node.js runtime.

  - WebSockets: In contrast to TCP, WebSockets operate at the application layer (layer 7), which makes maintaining connections less efficient, but they work in both Node.js and the browser.

- Encryption: Noise. We had no other option, since TLS had no JavaScript implementation.

- Multiplexer:

  - mplex: mplex is a simple stream multiplexer that was designed in the early days of libp2p. It is a simple protocol that does not provide many features offered by other stream multiplexers. Notably, mplex does not provide flow control, a feature which is now considered critical for a stream multiplexer. mplex runs over a reliable, ordered pipe between two peers, such as a TCP connection. Peers can open, write to, close, and reset a stream. mplex uses a message-based framing layer like yamux, enabling it to multiplex different data streams, including stream-oriented data and other types of messages.

  - yamux: Yamux (Yet another Multiplexer) is a powerful stream multiplexer used in libp2p. It was initially developed by HashiCorp for Go and is now implemented in Rust, JavaScript and other languages. It enables multiple parallel streams on a single TCP connection. The design was inspired by SPDY (which later became the basis for HTTP/2), but it is not compatible with it. One of the key features of Yamux is its support for flow control through backpressure, which prevents data from being sent faster than it can be processed. The receiver specifies an offset up to which the sender may send data, and this offset increases as the receiver processes the data. This keeps the sender from overwhelming the receiver, especially when the receiver has limited resources or needs to process complex data.

  Note: Yamux should be used over mplex in libp2p, as mplex doesn't provide a mechanism to apply backpressure at the stream level.

- Node discovery: Kad-DHT. The Kademlia Distributed Hash Table (DHT), or Kad-DHT, is a distributed hash table designed for P2P networks. Kad-DHT in libp2p is a subsystem based on the Kademlia whitepaper. It offers a way to find nodes and data on the network by using a routing table that organizes peers by how close their keys are (by XOR distance).

Note: Kad-DHT bootstrapping did not work as expected, which is why the code below connects to the bootstrap nodes directly after constructing the node.

import { createLibp2p } from "libp2p";
import { multiaddr } from "@multiformats/multiaddr";

// `config` holds the transport, encryption, multiplexer and DHT options listed above
const nodeP2p = await createLibp2p(config);
// Start the node if createLibp2p has not already started it
await nodeP2p.start();

// Manual patch for node bootstrap: dial the public bootstrap nodes directly
const addresses = [
  "/dnsaddr/bootstrap.libp2p.io/p2p/QmNnooDu7bfjPFoTZYxMNLWUQJyrVwtbZg5gBMjTezGAJN",
  "/dnsaddr/bootstrap.libp2p.io/p2p/QmQCU2EcMqAqQPR2i9bChDtGNJchTbq5TbXJJ16u19uLTa",
  "/dnsaddr/bootstrap.libp2p.io/p2p/QmbLHAnMoJPWSCR5Zhtx6BHJX9KiKNN6tpvbUcqanj75Nb",
  "/dnsaddr/bootstrap.libp2p.io/p2p/QmcZf59bWwK5XFi76CZX8cbJ4BhTzzA3gU1ZjYZcYW3dwt",
].map((e) => multiaddr(e));

for (const address of addresses) {
  await nodeP2p.dial(address);
}

Helia

Helia is a new project that implements IPFS in a modular manner. You can construct a new Helia instance on top of libp2p.

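import { createHelia } from "helia";
import { FsBlockstore } from "blockstore-fs";
import type { Libp2p } from "libp2p";

// Build a Helia node on top of an existing libp2p node, persisting blocks on disk
async function createHeliaNode(libp2p: Libp2p) {
  return createHelia({
    blockstore: new FsBlockstore("./local-storage"),
    libp2p,
  });
}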

Because Helia runs on a libp2p instance that we pass in, it remains highly configurable.

UnixFS

To handle file I/O, we use UnixFS. It is constructed the same way as Helia, except that it takes a Helia instance instead of a libp2p instance.

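import { unixfs } from "@helia/unixfs";
import { CID } from "multiformats/cid";

// `heliaNode` is the Helia instance created above
const fs = unixfs(heliaNode);
const decoder = new TextDecoder();
let text = "";

// CID.parse throws on malformed input, so no extra null check is needed
const testCID = CID.parse("QmdASJKc1koDd9YczZwAbYWzUKbJU73g6YcxCnDzgxWtp3");
console.log("Read:", testCID.toString());

// fs.cat streams the file content as Uint8Array chunks
for await (const chunk of fs.cat(testCID)) {
  text += decoder.decode(chunk, { stream: true });
}
text += decoder.decode(); // flush any buffered partial character
console.log(text);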

After researching libp2p and IPFS, we introduced StorageEngineIPFS, which handles IPFS I/O. The details are given in the specs.

Storage Engine

The Storage Engine handles file storage and local caching, and also indexes files for later access.

IPFS Storage Engine

The IPFS Storage Engine is a distributed storage engine based on IPFS. StorageEngineIPFS is an implementation of IFileSystem and IFileIndex that handles all I/O operations and indexing.

// CID, PeerId and IPNSEntry are types from the multiformats, libp2p and ipns packages

/**
 * An interface for the file engine; depending on the environment,
 * the file engine could be different
 */
export interface IFileSystem<S, T, R> {
  writeBytes(_data: R): Promise<T>;
  write(_filename: S, _data: R): Promise<T>;
  read(_filename: S): Promise<R>;
  remove(_filename: S): Promise<boolean>;
}

/**
 * Methods that perform indexing and file lookup
 */
export interface IFileIndex<S, T, R> {
  publish(_contentID: T): Promise<R>;
  republish(): void;
  resolve(_peerID?: S): Promise<T>;
}

/**
 * IPFS file system
 */
export type TIPFSFileSystem = IFileSystem<string, CID, Uint8Array>;

/**
 * IPFS file index
 */
export type TIPFSFileIndex = IFileIndex<PeerId, CID, IPNSEntry>;
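To make the IFileSystem contract concrete, here is a hypothetical in-memory implementation that could serve as a test double. It is not StorageEngineIPFS: content ids are hex SHA-256 digests rather than real CIDs, and filenames simply map to the content id of their latest write. The interface is repeated so the sketch compiles on its own.

```typescript
import { createHash } from "node:crypto";

// Same shape as IFileSystem above, repeated so this sketch is self-contained.
interface IFileSystem<S, T, R> {
  writeBytes(_data: R): Promise<T>;
  write(_filename: S, _data: R): Promise<T>;
  read(_filename: S): Promise<R>;
  remove(_filename: S): Promise<boolean>;
}

// Hypothetical in-memory engine: content-addressed blocks plus a filename index.
class MemoryFileSystem implements IFileSystem<string, string, Uint8Array> {
  private blocks = new Map<string, Uint8Array>();
  private names = new Map<string, string>();

  async writeBytes(data: Uint8Array): Promise<string> {
    const id = createHash("sha256").update(data).digest("hex");
    this.blocks.set(id, data.slice()); // copy so later mutation by the caller has no effect
    return id;
  }

  async write(filename: string, data: Uint8Array): Promise<string> {
    const id = await this.writeBytes(data);
    this.names.set(filename, id);
    return id;
  }

  async read(filename: string): Promise<Uint8Array> {
    const id = this.names.get(filename);
    const data = id === undefined ? undefined : this.blocks.get(id);
    if (data === undefined) throw new Error(`not found: ${filename}`);
    return data;
  }

  async remove(filename: string): Promise<boolean> {
    // Only the name is dropped; the immutable block itself is kept
    return this.names.delete(filename);
  }
}
```

Because `writeBytes` is content-addressed, writing the same bytes twice returns the same id, mirroring how identical data maps to the same CID on IPFS.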

The relationship between StorageEngineIPFS and other classes/interfaces is shown below:

classDiagram
  LibP2pNode -- StorageEngineIPFS
  Helia -- StorageEngineIPFS
  UnixFS -- StorageEngineIPFS
  IPNS -- StorageEngineIPFS
  IFileSystem <|-- StorageEngineIPFS
  IFileIndex <|-- StorageEngineIPFS
  IFileSystem : writeBytes(data Uint8Array) CID
  IFileSystem : write(filename string, data Uint8Array) CID
  IFileSystem : read(filename string) Uint8Array
  IFileSystem : remove(filename string) boolean
  IFileIndex : publish(contentID CID) IPNSEntry
  IFileIndex : republish() void
  IFileIndex : resolve(peerID PeerId) CID
  StorageEngineIPFS : static getInstance(basePath, config)

In our implementation, we use datastore-fs and blockstore-fs to persist changes to local files. For now, browsers lack the performance to handle the connections and I/O, so the best available solution is to run a local node that handles all I/O and connections.

Usage of IPFS Storage Engine

The database is cached locally to make sure the records remain available even when they are no longer live on the IPFS network. To start an instance of StorageEngineIPFS, we need to provide a basePath and a config (the config is omitted in this example):

const storageIPFS = await StorageEngineIPFS.getInstance(

"/Users/chiro/GitHub/zkDatabase/zkdb/data"

);

The basePath is the path to the local cache folder; the folder is created if it does not exist. The config is the configuration of the IPFS node; the default config is used if none is provided. Once we have the StorageEngineIPFS instance, we can use it to perform I/O operations.

// Switch to collection `test`
storageIPFS.use("test");

// Write a document to the current collection
await storageIPFS.writeBSON({ something: "stupid" });

// Read BSON data from IPFS
console.log(
  BSON.deserialize(
    await storageIPFS.read("bbkscciq5an6kqbwixefbpnftvo34pi2jem3e3rjppf3hai2gyifa")
  )
);

The process to update collection metadata and master metadata will be described in the following sections.

File mutability

DAG nodes are immutable, but we cannot hand out a new CID every time the data changes. IPNS solves this: it creates a record that maps a PeerID to a CID. Since the PeerID never changes, as long as we keep the IPNSEntry up to date, other people can always get the current CID of the zkDatabase.

Metadata

The metadata file holds a mapping from each document's Poseidon hash to its CID, which allows us to retrieve the data from IPFS. It is also used to reconstruct the Merkle tree. The metadata is stored on IPFS, and we also keep a copy on the local file system.

IPFS Storage Engine folder structure

The structure of data folder is shown below:

├── helia
├── nodedata
│   ├── info
│   ├── peers
│   └── pkcs8
└── storage
    └── default

The helia folder holds the Helia node's information, and the nodedata folder holds the IPFS node's information, including the node identity, peers and additional info. The storage folder holds the data of our zkDatabase: each child folder of storage is named after a collection, and in this case there is only one collection, called default.

Metadata structure

At the root of the storage folder there is a metadata file containing the index records for all the collections' metadata files; we call it the master metadata.

{

"default": "bafkreibbdesmz6d4fp2h24d6gebefzfl2i4fpxseiqe75xmt4fvwblfehu"

}

Here, default is the name of the collection and bafkreibbdesmz6d4fp2h24d6gebefzfl2i4fpxseiqe75xmt4fvwblfehu is the CID of the collection's metadata file. We use IPNS to point the current node's PeerID to the CID of the master metadata file, from which we can retrieve the CIDs of the collections' metadata files.

There is also a metadata file in each collection folder, which we call the collection metadata.

{

"bbkscciq5an6kqbwixefbpnftvo34pi2jem3e3rjppf3hai2gyifa": "bafkreifnz52i6ssyjqsbeogetwhgiabsjnztuuy6mshke5uemid33dsqny"

}

Note that the key of the collection metadata is the Poseidon hash of the database document in base32 encoding, and the value is the CID of the document. The collection metadata is used to retrieve a document's CID from its Poseidon hash. The collection folder also contains a file named bbkscciq5an6kqbwixefbpnftvo34pi2jem3e3rjppf3hai2gyifa.zkdb, which holds the content of the document encoded with BSON.
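The two-level lookup (master metadata, then collection metadata, then document CID) can be sketched as follows, reusing the example files above. The loader that stands in for "fetch this CID from IPFS or the local copy and parse it as JSON", the helper names, and the `master-metadata-cid` key (the master file's CID is not given above; in practice it comes from resolving IPNS) are all hypothetical.

```typescript
type Metadata = Record<string, string>;

// Hypothetical loader: reads a metadata file by CID from an in-memory map.
function makeLoader(files: Map<string, Metadata>): (cid: string) => Metadata {
  return (cid) => {
    const m = files.get(cid);
    if (m === undefined) throw new Error(`unknown CID ${cid}`);
    return m;
  };
}

// master metadata (found via IPNS) -> collection metadata CID -> document CID
function findDocumentCid(load: (cid: string) => Metadata, masterCid: string, collection: string, docHash: string): string | undefined {
  const collectionCid = load(masterCid)[collection];
  if (collectionCid === undefined) return undefined;
  return load(collectionCid)[docHash];
}

const files = new Map<string, Metadata>([
  ["master-metadata-cid", { default: "bafkreibbdesmz6d4fp2h24d6gebefzfl2i4fpxseiqe75xmt4fvwblfehu" }],
  [
    "bafkreibbdesmz6d4fp2h24d6gebefzfl2i4fpxseiqe75xmt4fvwblfehu",
    { bbkscciq5an6kqbwixefbpnftvo34pi2jem3e3rjppf3hai2gyifa: "bafkreifnz52i6ssyjqsbeogetwhgiabsjnztuuy6mshke5uemid33dsqny" },
  ],
]);

console.log(findDocumentCid(makeLoader(files), "master-metadata-cid", "default", "bbkscciq5an6kqbwixefbpnftvo34pi2jem3e3rjppf3hai2gyifa"));
// bafkreifnz52i6ssyjqsbeogetwhgiabsjnztuuy6mshke5uemid33dsqny
```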

BSON Document

BSON, or Binary JSON, is the data format we use to encode and decode documents. Documents are grouped into collections.

Merkle Tree

To keep our Merkle tree verification succinct, efficient and friendly to SnarkyJS, a Poseidon Merkle tree is used to prove the immutability of the data. Specifically, we use a Sparse Merkle Tree, an adaptation of the conventional Merkle tree.

Sparse Merkle Tree (SMT)

A Sparse Merkle Tree (SMT) is a variant of a standard Merkle tree that is optimized for scenarios where the data set is very large, but only a small portion of it is populated with values. You could refer to the following article to learn more: What’s a Sparse Merkle Tree?.

Advantages of SMT

Sparse Merkle Trees (SMTs) offer several benefits:

- Efficiency: They allow efficient storage of large, sparse datasets with minimal memory overhead.

- Security: Sparse Merkle Trees (SMTs) share the tamper-proof nature of traditional Merkle Trees, ensuring cryptographic data integrity. However, they also share the same vulnerabilities, such as potential false proofs through hash collisions or second preimage attacks. To mitigate these risks, a strong, collision-resistant hash function is crucial. Additionally, cryptographic commitments to the SMT root can enhance security. With proper implementation, SMTs offer efficient and secure data storage for sparse datasets.

- Proof Size: For a fixed tree height, the proof size is constant (one sibling per level) regardless of how many leaves are populated, making SMTs well suited to scenarios where frequent proofs are required.

- Flexible Updating: They support efficient updates and insertions even in massive datasets.

Ways to store Merkle Tree on IPFS

Here are different ways you could store a Merkle Tree on IPFS:

- JSON Serialization: One of the simplest ways to store a Merkle Tree in IPFS is to convert the Merkle Tree to a JSON structure and then save that to IPFS. This is a straightforward method but can be inefficient for large trees, as the entire tree needs to be retrieved even if you're only interested in certain parts of it.

- IPLD (InterPlanetary Linked Data): IPLD is a data model and set of coding conventions for linking distributed content on IPFS. By using IPLD, you can create links between any pieces of data stored within IPFS. While it involves the concept of DAGs, it provides a more flexible and efficient way to store and retrieve Merkle Trees on IPFS.

- BSON Serialization: BSON, or Binary JSON, extends the popular JSON model to include additional data types such as Date and raw binary data, and allows for a level of efficiency not present in standard JSON. This is because BSON data is a binary representation of JSON-like documents, and BSON documents may have elements that are BSON arrays. Storing a Merkle Tree in IPFS using BSON serialization would provide a more space-efficient and potentially faster method for data retrieval compared to JSON, especially for large trees with binary data. As with JSON, though, the whole tree would need to be retrieved even if you're only interested in certain parts. However, if the Merkle tree's structure can be mapped to a BSON document structure, it might allow for partial tree loading. When using BSON, you need to ensure that the data types you use in your Merkle Tree are compatible with BSON serialization. Some data types may not be supported or may need special handling.

Storing SMT

Roughly speaking, Sparse Merkle Trees consist of two types of nodes: filled nodes representing actual data, and zero nodes denoting areas of the tree that are unoccupied or sparse.

For effective persistence of a Merkle Tree in any storage medium, three key functions must be executed:

- Storing nodes

- Fetching nodes

- Creating a Merkle Tree proof

All standard Merkle tree functions can be implemented on top of these key functions.

Zero nodes

For each level, zero nodes remain constant and can be generated during the initialization of the Merkle Tree.

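import { Field, Poseidon } from "snarkyjs";

class SparseMerkleTree {
  // zeroes[i] is the root hash of an empty subtree whose root sits at level i
  protected zeroes: Field[];

  constructor(height: number) {
    this.zeroes = new Array(height);
    this.zeroes[0] = Field(0); // an empty leaf
    for (let i = 1; i < height; i += 1) {
      this.zeroes[i] = Poseidon.hash([this.zeroes[i - 1], this.zeroes[i - 1]]);
    }
  }
}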

Filled nodes

In order to properly store filled nodes, a more advanced approach is needed. As a rule of thumb, every digest must be accompanied by metadata that outlines its position within the tree. This information will assist in the restoration of the node and its associated proof in the future.

Consider the following as an example of how a node might be depicted in IPLD:

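import type { Field } from "snarkyjs";
import type { CID } from "multiformats/cid";

interface IPLDNode {
  // level and index locate the node within the tree
  level: number;
  index: string;
  hash: Field;
  leftChildCID: CID | null;
  rightChildCID: CID | null;
}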

Merkle Proof

A Merkle Proof forms a vital component of the Merkle Tree.

Consider this general interface for a single step of a proof:

interface MerkleProof {

sibling: Field;

isLeft: boolean; // isLeft = `index` mod 2 == 0

}

sibling is the other child of the parent node on the path, while isLeft can be determined by taking the node's index modulo 2. A full proof is a list of such steps, one per level between the leaf and the root.

Merkle proofs can be built in two directions:

- from root to leaf

- from leaf to root

When using IPLD, constructing a Merkle proof from root to leaf is a logical approach since the alternative is less efficient due to the need to initially locate the leaf.

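A Merkle proof can also be used to add or update leaves: the siblings along the path are exactly the values needed to recompute every ancestor of the changed leaf, up to the root.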
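The three key functions (storing nodes, fetching nodes, creating proofs) and the proof-based update can be combined into a small in-memory SMT. This is a sketch under stated assumptions: SHA-256 over concatenated 64-character hex strings stands in for `Poseidon.hash`, a `Map` keyed by `"level:index"` stands in for IPLD/IPFS storage, and the all-zero string is an assumed empty-leaf value. Only filled nodes are stored; any missing node falls back to the zero node of its level.

```typescript
import { createHash } from "node:crypto";

// Stand-in for Poseidon.hash([left, right]); nodes are 64-char hex strings.
const H = (left: string, right: string): string =>
  createHash("sha256").update(left + right).digest("hex");

// One proof step; isLeft tells whether the node on the path is a left child.
interface ProofStep { sibling: string; isLeft: boolean }

class SparseMerkleTree {
  readonly height: number; // number of levels: leaves at 0, root at height - 1
  private zeroes: string[] = [];
  private nodes = new Map<string, string>(); // "level:index" -> hash, filled nodes only

  constructor(height: number) {
    this.height = height;
    this.zeroes.push("0".repeat(64)); // assumed empty-leaf value
    for (let i = 1; i < height; i += 1) this.zeroes.push(H(this.zeroes[i - 1], this.zeroes[i - 1]));
  }

  // Fetching nodes: fall back to the precomputed zero node of the level.
  private getNode(level: number, index: bigint): string {
    return this.nodes.get(`${level}:${index}`) ?? this.zeroes[level];
  }

  root(): string {
    return this.getNode(this.height - 1, 0n);
  }

  // Creating a proof, leaf to root: one sibling per level below the root.
  getProof(index: bigint): ProofStep[] {
    const proof: ProofStep[] = [];
    for (let level = 0; level < this.height - 1; level += 1) {
      const isLeft = index % 2n === 0n;
      proof.push({ sibling: this.getNode(level, isLeft ? index + 1n : index - 1n), isLeft });
      index /= 2n;
    }
    return proof;
  }

  // Storing nodes: write the leaf, then recompute each ancestor from the proof's siblings.
  setLeaf(index: bigint, value: string): void {
    if (index < 0n || index >= 1n << BigInt(this.height - 1)) throw new Error("index out of range");
    const proof = this.getProof(index);
    let hash = value;
    this.nodes.set(`0:${index}`, hash);
    proof.forEach(({ sibling, isLeft }, level) => {
      hash = isLeft ? H(hash, sibling) : H(sibling, hash);
      index /= 2n;
      this.nodes.set(`${level + 1}:${index}`, hash);
    });
  }
}

// Verifier side: recompute the root from a leaf value and its proof.
function computeRoot(leaf: string, proof: ProofStep[]): string {
  return proof.reduce((h, { sibling, isLeft }) => (isLeft ? H(h, sibling) : H(sibling, h)), leaf);
}

const tree = new SparseMerkleTree(32); // 2^31 leaf slots, but only filled nodes are stored
tree.setLeaf(5n, "a".repeat(64));
console.log(computeRoot("a".repeat(64), tree.getProof(5n)) === tree.root()); // true
```

Each operation touches one node per level, which is where the O(height) cost comes from.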

Time complexity

The time complexity of every operation on a distributed SMT is O(n), where n is the height of the tree, since each operation touches one node per level.

zkMemory: A Universal Memory Prover in Zero-Knowledge Proof

zkMemory is a powerful building block for creating secure and efficient Zero-Knowledge Virtual Machines (zkVMs). Its modular design allows developers to integrate zkMemory into their zkVM architecture. This component acts as a dedicated memory prover, handling ZKP generation for memory operations within the zkVM.

By leveraging zkMemory's modularity, developers can design zkVMs with customized instruction sets and architectures, tailoring the virtual machine to specific needs. This flexibility, coupled with zkMemory's potential efficiency gains in proof generation, paves the way for a new generation of zkVM applications within the Web3 landscape.

Specification

In this chapter we give a brief overview of zkMemory, including its components and functionalities.

zkMemory is a module built by Orochi Network which allows a prover to prove the consistency when reading and writing values in the memory. The problem can be stated as follows:

Consider a memory \(M\). For any cell of \(M\), at any point in time, whenever we read from the cell, the value read must equal the value most recently written to that same cell.

Proving memory consistency is an important sub-component of proving the correctness of RAM programs, which can be used to construct zkVMs. Therefore, zkMemory could serve as a powerful library that handles the memory part of zkVMs and helps developers build custom zkVMs tailored to specific needs within the Web3 landscape. We divide this chapter into \(4\) major parts:

- In Execution Trace, we describe the execution trace, which is used to prove memory consistency for a memory \(M\) and a computation process of \(N\) steps.

- In Commitment Schemes, we describe the commitment schemes we support for committing to the execution trace.

- In Memory Consistency Constraints, we show how to prove the consistency of memory given the execution trace, and how we integrate Halo2 to express the constraints.

- In Nova Variants, we show how to prove the consistency of memory with two Nova variants, Nova and SuperNova. This implementation serves to experiment with the capabilities of Nova and SuperNova for zkVMs.

Execution Trace

We view a memory \(M\) as an array \(M=(M_1,M_2,\dots,M_m)\). For a computation with \(N\) steps, in each step of the program execution we either i) read a value from \(M_i\) for some \(i \leq m\), or ii) write a value \(val\) to a cell \(M_i\) for some \(i \leq m\). To capture each reading/writing step, we define an execution trace to be the following tuple:

$$(addr,time,op,val)$$

Here, \(addr\) is the address of the cell involved in the read/write, which lies in \(\{1,2,\dots,m\}\); \(time\) is the time log; \(op \in \{0,1\}\) determines whether the operation is READ or WRITE; and \(val\) is the value written to or read from \(M_{addr}\). In the \(i\)-th execution step, the \(i\)-th trace is sufficient to determine the read/write action performed on the memory in that step. After the execution, we obtain an array of execution traces \((addr_i,time_i,op_i,val_i)_{i=1}^N\). In the following sections, we show how to prove memory consistency given the whole execution trace array.
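Before any ZKP machinery, it helps to see the property being proven as a plain check. The sketch below is a non-ZK reference check, not zkMemory's constraint system: it sorts the trace by \((addr, time)\) and requires every READ to return the value of the previous access to the same cell. The text only says \(op \in \{0,1\}\), so the encoding 0 = READ, 1 = WRITE is an assumption, as is initialising every cell to 0.

```typescript
// Assumed encoding: 0 = READ, 1 = WRITE.
const READ = 0;
const WRITE = 1;

interface TraceEntry { addr: number; time: number; op: 0 | 1; val: bigint }

// Non-ZK reference check: after sorting by (addr, time), each READ must return the value
// of the previous access to the same cell, or 0 if the cell was never touched (assumed init).
function isMemoryConsistent(trace: TraceEntry[]): boolean {
  const sorted = [...trace].sort((a, b) => a.addr - b.addr || a.time - b.time);
  for (let i = 0; i < sorted.length; i += 1) {
    const cur = sorted[i];
    const prev: TraceEntry | undefined = i > 0 ? sorted[i - 1] : undefined;
    if (prev !== undefined && prev.addr === cur.addr) {
      if (prev.time === cur.time) return false; // two accesses to one cell at the same time
      // prev is either the last WRITE or a READ that was already checked against it
      if (cur.op === READ && cur.val !== prev.val) return false;
    } else if (cur.op === READ && cur.val !== 0n) {
      return false; // first access to this cell reads a non-initial value
    }
  }
  return true;
}

const trace: TraceEntry[] = [
  { addr: 1, time: 1, op: WRITE, val: 5n },
  { addr: 2, time: 2, op: READ, val: 0n },
  { addr: 1, time: 3, op: READ, val: 5n },
];
console.log(isMemoryConsistent(trace)); // true
```

Sorting by address and then time is what turns a global property ("every read matches the latest write") into purely local checks between adjacent entries, which is the shape a circuit can enforce.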

Commitment Schemes

The first step in zkMemory is to commit to the memory. This commitment serves as the public instance, which prevents the prover from cheating during the computation process. We then employ a ZKP protocol to prove that the execution trace is correct with respect to its commitment. Currently, our zkMemory implementation supports three commitment schemes: KZG, Merkle Tree and Verkle Tree. We now give a high-level overview of these schemes.

KZG Commitment Scheme

KZG is the first commitment scheme we support; it is widely used in various ZKP systems such as Halo2. We have already presented an overview of KZG here, so we refer readers unfamiliar with KZG to that overview. Let \(\mathsf{F}\) be a finite field, and let \(\omega \in \mathsf{F}\) be a primitive \(4\)-th root of unity. To commit each trace \((addr,time,op,val)\) in the execution trace, we first create the polynomial \(p(x)\) of degree at most \(3\) such that:

$$p(1)=addr,~p(\omega)=time,~p(\omega^2)=op,~p(\omega^3)=val$$

After creating the polynomial \(p(x)\), we commit to it with the KZG commitment algorithm, denoting the result by \(\mathsf{com}(p(x))\); the list \((\mathsf{com}(p_i(x)))_{i=1}^N\) is the commitment to the whole execution trace. Later, if needed, we can open any trace using the techniques described here, which provide constant opening size and verification time.
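The encoding step can be sketched as follows. This minimal Python example interpolates the polynomial of degree at most \(3\) through the four points with an inverse DFT over the \(4\)-th roots of unity, then checks that it evaluates back to the trace fields. It assumes the prime field of order \(998244353\) purely for illustration (zkMemory works over the field of its proof system), and it stops before the actual KZG commitment, which additionally needs a pairing-friendly curve and a trusted setup.

```python
# Illustrative prime with P % 4 == 1, so F_P contains primitive 4th roots of unity.
# This is NOT the field used by zkMemory.
P = 998_244_353


def primitive_4th_root(p: int) -> int:
    """Return w with w^2 = -1 (mod p), hence w^4 = 1: a primitive 4th root of unity."""
    assert p % 4 == 1
    for c in range(2, p):
        if pow(c, (p - 1) // 2, p) == p - 1:  # c is a quadratic non-residue
            return pow(c, (p - 1) // 4, p)
    raise ValueError("no quadratic non-residue found")


W = primitive_4th_root(P)


def interpolate_trace(addr, time, op, val, p=P, w=W):
    """Coefficients [c0, c1, c2, c3] of the polynomial poly with poly(1) = addr,
    poly(w) = time, poly(w^2) = op, poly(w^3) = val (inverse DFT of size 4)."""
    ys = [addr % p, time % p, op % p, val % p]
    w_inv = pow(w, p - 2, p)
    four_inv = pow(4, p - 2, p)
    return [
        sum(ys[j] * pow(w_inv, j * k, p) for j in range(4)) * four_inv % p
        for k in range(4)
    ]


def evaluate(coeffs, x, p=P):
    """Horner evaluation of c0 + c1*x + c2*x^2 + c3*x^3 modulo p."""
    acc = 0
    for c in reversed(coeffs):
        acc = (acc * x + c) % p
    return acc
```

Using the roots of unity as evaluation points is what makes the interpolation a size-\(4\) DFT, so a trace maps to a polynomial in a fixed number of field operations.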

Merkle Tree Commitment Scheme

Merkle Tree is the second commitment scheme we support for committing the memory. At a high level, each leaf of the tree is assigned a value, and the value of each parent is the hash of its children. When opening a leaf node, the prover reveals the sibling nodes along the path from the leaf to the root, from which the values on the path itself can be recomputed and checked against the root. The collision resistance of the underlying cryptographic hash function ensures that the prover cannot produce a valid opening for a wrong leaf value.
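A minimal sketch of Merkle opening and verification, assuming SHA-256 as the hash function and a power-of-two number of leaves. The function names are hypothetical, and leaf/node domain separation is omitted for brevity.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_levels(leaves):
    """All tree levels, from hashed leaves up to the root (len(leaves) a power of two)."""
    level = [h(leaf) for leaf in leaves]
    levels = [level]
    while len(level) > 1:
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def open_leaf(levels, index):
    """Sibling hashes on the path from leaf `index` to the root."""
    path = []
    for level in levels[:-1]:
        path.append(level[index ^ 1])  # the sibling is the other node of the pair
        index //= 2
    return path


def verify(root, leaf, index, path):
    """Recompute the path from the leaf and its siblings, then compare with the root."""
    node = h(leaf)
    for sibling in path:
        node = h(node + sibling) if index % 2 == 0 else h(sibling + node)
        index //= 2
    return node == root
```

The opening contains one hash per level, so its size grows with the tree height.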

There are two methods for committing memory using a Merkle tree. The first method is to commit to the trace, similar to what we did with the KZG commitment. The second method commits to the memory cells instead of the trace, assigning each leaf node to a memory cell. In each step, we update the commitment from \(C\) to \(C'\) and provide a Merkle proof that \(C\) was indeed updated to \(C'\). This proof can be generated inside a circuit that hides everything except the instances, which are \(C\) and \(C'\). The second method will be used to support memory consistency in IVC-based constructions such as the Nova proof system, which we are currently integrating into our zkMemory module.
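The second method can be sketched as follows, assuming SHA-256, a power-of-two memory size and hypothetical helper names. Because a leaf's siblings do not depend on the leaf itself, one sibling path authenticates both the old value under \(C\) and the new value under \(C'\). In zkMemory this check would be expressed as a circuit; here it is plain Python.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def cell(value: int) -> bytes:
    return value.to_bytes(32, "big")  # encode a memory cell as a 32-byte leaf


def root_and_path(memory, index):
    """Merkle root over the memory cells and the sibling path of cell `index`."""
    level = [h(cell(v)) for v in memory]
    path = []
    while len(level) > 1:
        path.append(level[index ^ 1])
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        index //= 2
    return level[0], path


def root_from_path(value, index, path):
    node = h(cell(value))
    for sibling in path:
        node = h(node + sibling) if index % 2 == 0 else h(sibling + node)
        index //= 2
    return node


def verify_update(c_old, c_new, index, old_value, new_value, path):
    """Check that writing new_value at `index` turns commitment C into C'."""
    return (root_from_path(old_value, index, path) == c_old
            and root_from_path(new_value, index, path) == c_new)
```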

Verkle Tree Commitment Scheme

Verkle trees are very similar to Merkle trees. However, Verkle trees have several benefits over Merkle trees:

- Each parent node in a Verkle tree has many more children, so the tree has a much lower height when committing the same data.

- In addition, the number of children can be configured as desired, which makes Verkle trees more flexible than Merkle trees when used with proof systems.

Finally, to commit the memory with a Verkle tree, we proceed as with a Merkle tree, except that we employ the KZG commitment scheme to commit to the children of each node. This gives constant proof size and verification time for each node opening, which is another major benefit over Merkle trees, as discussed here.
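As rough arithmetic behind the height argument, the sketch below compares path lengths and opening sizes. It uses a simplified count that is assumed for illustration: a \(k\)-ary Merkle opening reveals \(k-1\) sibling hashes per level, while a Verkle opening reveals one commitment and one constant-size KZG opening per level, ignoring proof aggregation.

```python
def tree_height(num_leaves: int, arity: int) -> int:
    """Smallest h with arity**h >= num_leaves (integer loop, no float logs)."""
    h, capacity = 0, 1
    while capacity < num_leaves:
        capacity *= arity
        h += 1
    return h


def merkle_opening_hashes(num_leaves: int, arity: int) -> int:
    # k-ary Merkle tree: each level reveals arity - 1 sibling hashes
    return tree_height(num_leaves, arity) * (arity - 1)


def verkle_opening_elements(num_leaves: int, arity: int) -> int:
    # Verkle tree (simplified): one commitment + one KZG opening per level
    return tree_height(num_leaves, arity) * 2
```

For \(2^{30}\) leaves, a binary tree has height \(30\) while a \(256\)-ary tree has height \(4\); a \(256\)-ary Merkle tree would need \(1020\) sibling hashes per opening under this count, which is why wide trees pay off only when each node opening has constant size.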

Memory Consistency Constraints

Handling the Constraints using Sorting Technique

For an array of execution traces \(tr=(addr_i,time_i,op_i,val_i)_{i=1}^N\), one known method to prove the correctness of \(tr\) is the sorting technique, which can be found in [FKLO0W21]. At a high level, we create a new array \(tr'=(addr_i',time_i',op_i',val_i')_{i=1}^N\), which is a sorted version of \(tr\): its elements are sorted in increasing order by address, then by time. We then prove that the elements of \(tr'\) satisfy certain constraints, and that \(tr\) and \(tr'\) are permutations of each other.
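The sorting step and the permutation relation can be illustrated with the sketch below. Multiset equality is checked with a randomised grand product, \(\prod_i(\gamma - f(t_i)) = \prod_i(\gamma - f(t_i'))\), where \(f\) compresses a trace into one field element using a random \(\alpha\). This is a standard technique behind shuffle/permutation arguments, shown outside any circuit; the modulus \(2^{61}-1\) and all names are illustrative assumptions.

```python
import random

P = 2**61 - 1  # Mersenne prime used as an illustrative field modulus


def sort_trace(tr):
    """tr': the trace sorted by address, then by time."""
    return sorted(tr, key=lambda t: (t[0], t[1]))


def fingerprint(t, alpha, p=P):
    # Compress (addr, time, op, val) into addr + a*time + a^2*op + a^3*val mod p
    addr, time, op, val = t
    return (addr + alpha * time + pow(alpha, 2, p) * op + pow(alpha, 3, p) * val) % p


def is_permutation(tr, tr_sorted, p=P, rng=random.SystemRandom()):
    """Randomised multiset-equality check via matching grand products."""
    if len(tr) != len(tr_sorted):
        return False
    alpha, gamma = rng.randrange(p), rng.randrange(p)
    lhs = rhs = 1
    for t in tr:
        lhs = lhs * (gamma - fingerprint(t, alpha, p)) % p
    for t in tr_sorted:
        rhs = rhs * (gamma - fingerprint(t, alpha, p)) % p
    return lhs == rhs
```

A tampered trace makes the two products differ except with negligible probability over the choice of \(\alpha\) and \(\gamma\).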

First, let us look at the constraints on \(tr'\). For every \(1 \le i \le N-1\), the constraints are as follows:

$$ \begin{cases} time_i \lt time_{i+1}, \\ (op_{i+1}'=1) \lor (addr_i'=addr_{i+1}'), \\ (addr_i' \lt addr_{i+1}' ) \lor ((addr_i'= addr_{i+1}') \land (time_i' \lt time_{i+1}')),\\ (op_{i+1}'=1) \lor (val_i'=val_{i+1}') \lor (addr_{i}' \neq addr_{i+1}').\\ \end{cases} $$

Let us explain the constraints above. Recall that, to prove memory consistency, we simply need to prove that for each memory cell, the value read in the current step is equal to the value stored the last time the cell was written. With this idea, we sort the trace by address first, then by time, and check the ordering via the constraint \((addr_i' \lt addr_{i+1}' ) \lor ((addr_i'= addr_{i+1}') \land (time_i' \lt time_{i+1}'))\). In this way, we divide the array into consecutive "blocks", where each block contains the traces with the same address.

Within each block, we consider each trace in turn. If the current trace is a write (\(op'=1\)), there is nothing to check because a new value is being written. Otherwise (\(op'=0\)), we are reading from the cell, so the value \(val'\) must equal the value of the previous trace in the block; this is captured by the constraint \((op_{i+1}'=1) \lor (val_i'=val_{i+1}') \lor (addr_{i}' \neq addr_{i+1}')\). Moreover, we need to ensure that the first access to a memory cell is a WRITE, which is enforced by the constraint \((op_{i+1}'=1) \lor (addr_i'=addr_{i+1}')\): whenever a new block starts, the opcode of its first trace must be \(1\). Finally, the original array must be sorted in ascending time, which is captured by the constraint \(time_i \lt time_{i+1}\). Together, these constraints are sufficient to check the consistency of the memory.
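The following plain-Python checker is a minimal sketch of these constraints on tuples \((addr,time,op,val)\), assuming the permutation between \(tr\) and \(tr'\) is checked separately. As an extra assumption, it also requires the first trace of \(tr'\) to be a WRITE, since the pairwise constraints only cover traces at positions \(i+1 \ge 2\). The function name is hypothetical.

```python
def check_consistency(tr, tr_sorted):
    """Check the constraints on consecutive traces (addr, time, op, val).
    tr is the original trace; tr_sorted is tr' (sorted by address, then time)."""
    # Assumption: the very first sorted trace must also be a WRITE.
    if not tr_sorted or tr_sorted[0][2] != 1:
        return False
    for cur, nxt in zip(tr, tr[1:]):
        if not cur[1] < nxt[1]:                      # time_i < time_{i+1}
            return False
    for cur, nxt in zip(tr_sorted, tr_sorted[1:]):
        addr, time, _, val = cur
        addr2, time2, op2, val2 = nxt
        if not (op2 == 1 or addr == addr2):          # first access to a cell is a WRITE
            return False
        if not (addr < addr2 or (addr == addr2 and time < time2)):  # sorted order
            return False
        if not (op2 == 1 or val == val2 or addr != addr2):          # READ returns last value
            return False
    return True
```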

Integrating Halo2

In this section, we give a brief overview of handling the constraints using Halo2. For readers who are new to Halo2, we refer to halo2. Using the execution trace, we define our witness table, where each row has the following columns:

$$(addr,time,op,val,addr',dif,inv,time',op',val')$$

and the constraints (each must be equal to \(0\)) are rewritten in Halo2 as follows:

$$ \begin{cases} C(time_i,time_{i+1})=0, \\ (op_{i+1}'-1)\cdot (addr_{i}'-addr_{i+1}')=0, \\ C(addr_{i}'||time_{i}',addr_{i+1}'||time_{i+1}')=0, \\ (op_{i+1}'-1)\cdot (val_{i+1}'-val_i') \cdot ((addr_{i+1}'-addr_{i}') \cdot inv_{i+1} -1)=0. \\ \end{cases} $$

In the constraints above, we denote by \(C\) an expression such that \(C(a,b)=0\) iff \(a \lt b\). The idea for constructing this expression can be found here. At a high level, we parse the \(256\)-bit values \(a\) and \(b\) into \(8\)-bit chunks \((a_i)\) and \((b_i)\) for \(i \in \{1,2,\dots,32\}\), ordered from the most significant chunk, and look for the smallest \(j\) such that \(a_j \neq b_j\). Hence, proving \(a \lt b\) is equivalent to proving that \((b_j-a_j) \in \{1,\dots,255\}\) and \(a_i=b_i\) for all \(1\leq i \leq j-1\). With this, the expression \(C\) can be written at a high level as \(C=\mathsf{lookup}(b_j-a_j,\{1,\dots,255\})+\beta \cdot (\sum_{i=1}^{j-1}(b_i-a_i)\cdot \alpha^i )\), where \(\alpha ,\beta\) are random challenges generated by the verifier, and \(\mathsf{lookup}(c,\{1,\dots,255\})\) is an expression that returns \(0\) if and only if \(c \in \{1,2,\dots, 255\}\). By the Schwartz-Zippel lemma, if \(a \geq b\), then the expression \(C\) above is nonzero with overwhelming probability. In addition, to prove that \(tr\) and \(tr'\) are permutations of each other, we use the shuffle API from here.
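The chunk decomposition behind \(C\) can be sketched as follows. The lookup argument is modelled as a 0/1 indicator, indices are 0-based (so the \(i\)-th prefix term uses \(\alpha^{i+1}\)), and the modulus \(2^{255}-19\) is an arbitrary prime chosen for illustration; none of this is zkMemory's actual circuit code.

```python
P = 2**255 - 19  # illustrative prime modulus, not the field used in zkMemory


def to_chunks(x: int, num_chunks: int = 32) -> list:
    """Big-endian 8-bit chunks of x: index 0 is the most significant byte."""
    return list(x.to_bytes(num_chunks, "big"))


def first_diff(a: int, b: int, num_chunks: int = 32):
    """Index j of the first chunk where a and b differ (None if a == b)."""
    ac, bc = to_chunks(a, num_chunks), to_chunks(b, num_chunks)
    return next((j for j in range(num_chunks) if ac[j] != bc[j]), None)


def c_expr(a, b, j, alpha, beta, num_chunks=32, p=P):
    """C = lookup(b_j - a_j) + beta * sum_{i<j} (b_i - a_i) * alpha^(i+1)  (mod p)."""
    ac, bc = to_chunks(a, num_chunks), to_chunks(b, num_chunks)
    lookup = 0 if 1 <= bc[j] - ac[j] <= 255 else 1  # stand-in for the lookup argument
    prefix = sum((bc[i] - ac[i]) * pow(alpha, i + 1, p) for i in range(j))
    return (lookup + beta * prefix) % p
```

An honest prover picks \(j\) as the first differing chunk; choosing a later \(j\) to make the lookup pass leaves a nonzero prefix term, so \(C\) does not vanish.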

Finally, with the constraints above, we support proving memory consistency using Halo2. Our implementation can be found here.
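To see why the \(inv\) column is needed, the sketch below evaluates the second and fourth Halo2 constraints as field expressions. When \(addr_{i+1}' \neq addr_i'\), the prover supplies \(inv_{i+1}=(addr_{i+1}'-addr_i')^{-1}\), which switches the read check off; when the addresses are equal, the last factor is \(-1\) regardless of \(inv_{i+1}\), so an incorrect read cannot be hidden. The field \(2^{61}-1\) and the function names are illustrative assumptions.

```python
P = 2**61 - 1  # illustrative prime field, not the one used by zkMemory


def inv_witness(addr, addr_next, p=P):
    """Prover-supplied inv_{i+1}: (addr'_{i+1} - addr'_i)^{-1}, or 0 if they are equal."""
    d = (addr_next - addr) % p
    return pow(d, p - 2, p) if d else 0


def first_access_expr(op_next, addr, addr_next, p=P):
    """(op'_{i+1} - 1) * (addr'_i - addr'_{i+1}): zero iff WRITE or same address."""
    return (op_next - 1) * (addr - addr_next) % p


def read_expr(op_next, val, val_next, addr, addr_next, inv_next, p=P):
    """(op'_{i+1} - 1) * (val'_{i+1} - val'_i) * ((addr'_{i+1} - addr'_i) * inv_{i+1} - 1)."""
    return (op_next - 1) * (val_next - val) * ((addr_next - addr) * inv_next - 1) % p
```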

Nova and SuperNova

For the Nova variants [KS22], we do not commit to the execution trace as polynomials; instead, we commit to the value of each memory cell \(M[addr_j]\) in the step circuit to prove memory consistency.

Given an initial memory \(M\) and an execution trace list \(tr\):

$$ (addr_i,instruction_i,value_i)_{i=1}^N$$

- If \(instruction_i=0\), read \(value_i\) from \(M[addr_i]\).

- If \(instruction_i=1\), write \(value_i\) to \(M[addr_i]\).

Every \(j\)-th step must satisfy the following constraints:

- \(addr_j \le |M|\)

- \((instruction_j - 1)\cdot(M_j[addr_j] - value_j) = 0\)

- \(instruction_j \in \{ 0,1 \}\)

In the implementation, we let \(z_i\) be the \(i\)-th memory state, and the step circuit takes a witness input, the \(i\)-th trace record, consisting of \(addr_i\), \(instruction_i\) and \(value_i\). For applications where one needs to commit to the memory before proving, we also place a commitment to the memory in the last cell of \(z_i\).
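A minimal sketch of this step function \(z_{i+1} = F(z_i, w_i)\) in plain Python, with no folding or proving. It uses 0-based addresses, so the range check becomes \(0 \le addr < |M|\), and a SHA-256 hash over the cells stands in for the memory commitment stored in the last cell of \(z_i\); both choices and all names are assumptions for illustration.

```python
import hashlib


def commit(cells):
    """Stand-in memory commitment: SHA-256 over the 32-byte encodings of the cells."""
    data = b"".join(v.to_bytes(32, "big") for v in cells)
    return int.from_bytes(hashlib.sha256(data).digest(), "big")


def require(condition, message):
    if not condition:
        raise ValueError(message)


def step(z, record):
    """One step z_{i+1} = F(z_i, w_i): z_i = memory cells + [commitment],
    witness w_i = (addr, instruction, value)."""
    memory, com = list(z[:-1]), z[-1]
    addr, instruction, value = record
    require(com == commit(memory), "memory does not match its commitment")
    require(0 <= addr < len(memory), "address out of range")
    require(instruction in (0, 1), "instruction must be 0 or 1")
    # (instruction - 1) * (M[addr] - value) = 0: a READ must return the stored value
    require((instruction - 1) * (memory[addr] - value) == 0, "inconsistent read")
    if instruction == 1:
        memory[addr] = value  # a WRITE updates the cell
    return memory + [commit(memory)]


def run_steps(initial_memory, trace):
    """Apply F to every trace record in order."""
    z = list(initial_memory) + [commit(initial_memory)]
    for record in trace:
        z = step(z, record)
    return z
```

In an IVC scheme such as Nova, each call to `step` would correspond to one folded instance of the step circuit.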

Orand: Public Randomness Beacon

Orand leverages a Distributed Verifiable Random Function (DVRF). In this setup, multiple participants collaborate in a distributed computational process to generate a single random value. Each participant contributes to the computation, but no individual can determine the outcome alone. This collective approach ensures that the resulting random value is both unpredictable and unbiased. Importantly, anyone can verify the generated value, which maintains transparency and eliminates the need to trust any single third party, since the integrity of the randomness is collectively assured and publicly verifiable.
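For intuition, here is a toy sketch of one classic way to build a DVRF: a DDH-based threshold construction with Shamir-shared keys, partial evaluations \(H(x)^{sk_i}\) proven correct with Chaum-Pedersen proofs, and Lagrange interpolation in the exponent. It is not necessarily the construction Orand uses. The tiny group (\(p = 1019\)), the trusted dealer (a distributed key generation would replace it) and all function names are assumptions, and the parameters are insecure.

```python
import hashlib
import random

# Toy safe-prime group P = 2Q + 1; G = 4 generates the order-Q subgroup of squares.
# Far too small to be secure: illustration only.
P, Q, G = 1019, 509, 4


def hash_int(*parts) -> int:
    data = b"|".join(str(x).encode() for x in parts)
    return int.from_bytes(hashlib.sha256(data).digest(), "big")


def hash_to_group(msg: str) -> int:
    """Map msg to a non-identity element of the order-Q subgroup by squaring a hash."""
    ctr = 0
    while True:
        h = pow(hash_int(msg, ctr) % P, 2, P)
        if h not in (0, 1):
            return h
        ctr += 1


def deal(t, n, rng):
    """Trusted dealer: Shamir-share sk = f(0) with a degree-(t-1) polynomial f."""
    coeffs = [rng.randrange(1, Q) for _ in range(t)]
    shares = {i: sum(c * pow(i, k, Q) for k, c in enumerate(coeffs)) % Q
              for i in range(1, n + 1)}
    public_keys = {i: pow(G, s, P) for i, s in shares.items()}
    return coeffs[0], shares, public_keys


def partial_eval(sk_i, msg, rng):
    """gamma_i = H(msg)^sk_i with a Fiat-Shamir Chaum-Pedersen proof that
    log_G(pk_i) == log_H(gamma_i)."""
    h = hash_to_group(msg)
    gamma = pow(h, sk_i, P)
    k = rng.randrange(1, Q)
    a1, a2 = pow(G, k, P), pow(h, k, P)
    c = hash_int(G, h, pow(G, sk_i, P), gamma, a1, a2) % Q
    return gamma, (c, (k - c * sk_i) % Q)


def verify_partial(pk_i, msg, gamma, proof):
    h = hash_to_group(msg)
    c, s = proof
    a1 = pow(G, s, P) * pow(pk_i, c, P) % P  # equals G^k for an honest proof
    a2 = pow(h, s, P) * pow(gamma, c, P) % P  # equals H^k for an honest proof
    return c == hash_int(G, h, pk_i, gamma, a1, a2) % Q


def combine(partials):
    """Lagrange interpolation at 0 in the exponent: prod gamma_i^lambda_i = H(msg)^sk."""
    result = 1
    for i, gamma in partials.items():
        lam = 1
        for j in partials:
            if j != i:
                lam = lam * j * pow((j - i) % Q, Q - 2, Q) % Q
        result = result * pow(gamma, lam, P) % P
    return result


def vrf_output(combined):
    """Final random value: a hash of the combined group element."""
    return hashlib.sha256(str(combined).encode()).hexdigest()
```

Any \(t\) valid partial evaluations yield the same value \(H(x)^{sk}\), so no single participant determines the output, while the proofs let anyone check each contribution.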

Orocle: zkOracle Service

Orocle is an oracle service that fetches and verifies off-chain data for use in blockchain smart contracts. Unlike traditional centralized oracles, Orocle operates on a provable model, reducing the risks associated with single points of failure and enhancing trust through a distributed network of nodes. This service is crafted to provide real-time, accurate data feeds for dynamic applications in the Web3 ecosystem.

Unlocking the Web3 Universe: Orochi Network's Orocle Service

Imagine building a Web3 application that thrives on real-world data, free from centralized control. This is the vision behind Orochi Network's Orocle service, a powerful tool poised to revolutionize the way DApps interact with the external world.

Traditionally, DApps have struggled to access external data sources, relying on centralized oracles – single points of failure susceptible to manipulation and bias. Orochi's Orocle shatters these limitations, offering a decentralized, secure, and versatile solution for feeding accurate and verifiable data into your DApps.

Deployed Platform

Orocle V2 has been deployed on the following smart contract platforms:

Mainnet

Testnet

So, what exactly can the Orochi Orocle (Oracle) do?

- Gather Diverse Data: Access a vast pool of information, from financial markets and weather updates to social media sentiment and IoT sensor readings. The possibilities are endless, empowering DApps with real-time, relevant data.

- Decentralized & Trustworthy: Eliminate the risk of manipulation with a distributed network of nodes verifying and securing data integrity. No single entity controls the flow of information, fostering trust and transparency.

- Highly Scalable & Efficient: Designed to handle high volumes of data requests efficiently, ensuring your DApp performs smoothly even with complex data integrations.

- Chain Agnostic: Integrate seamlessly with various blockchain platforms, offering flexibility and wider reach for your DApp.

But why is this important? The potential applications are vast:

- Decentralized finance (DeFi): Integrate real-world market data for dynamic pricing and risk management in DeFi protocols.

- Prediction markets: Enable accurate and trustworthy predictions based on real-time events and data.